Jan 30, 2026

Table of contents

1. JVM Garbage Collection Pauses
2. Mapping Explosion
3. Oversharding (or Undersharding)
4. Deep Pagination Performance Cliff
5. Split-Brain and Data Loss
6. Eventual Consistency Surprises
7. Security Misconfigurations
8. Monitoring Complexity
9. Data Pipeline Sync Issues
10. Infrastructure Cost
11. The Alternative: Keep Search in Postgres
12. Get Started in 5 Minutes
13. Learn More

Elasticsearch may work great in initial testing and development, but production is a different story. This post is about what happens after you ship: the JVM tuning, the shard math, the 3 AM pages, the sync pipelines that break silently. The stuff your ops team lives with.

After years of teams running Elasticsearch in production, certain patterns keep emerging. The same issues show up in blog posts, Stack Overflow questions, and incident reports. We've compiled ten of the most common ones below, with references to the engineers who've documented them. We've also added images so you can skim quickly and compare each challenge against Postgres.

TLDR: With great power comes great operational complexity.

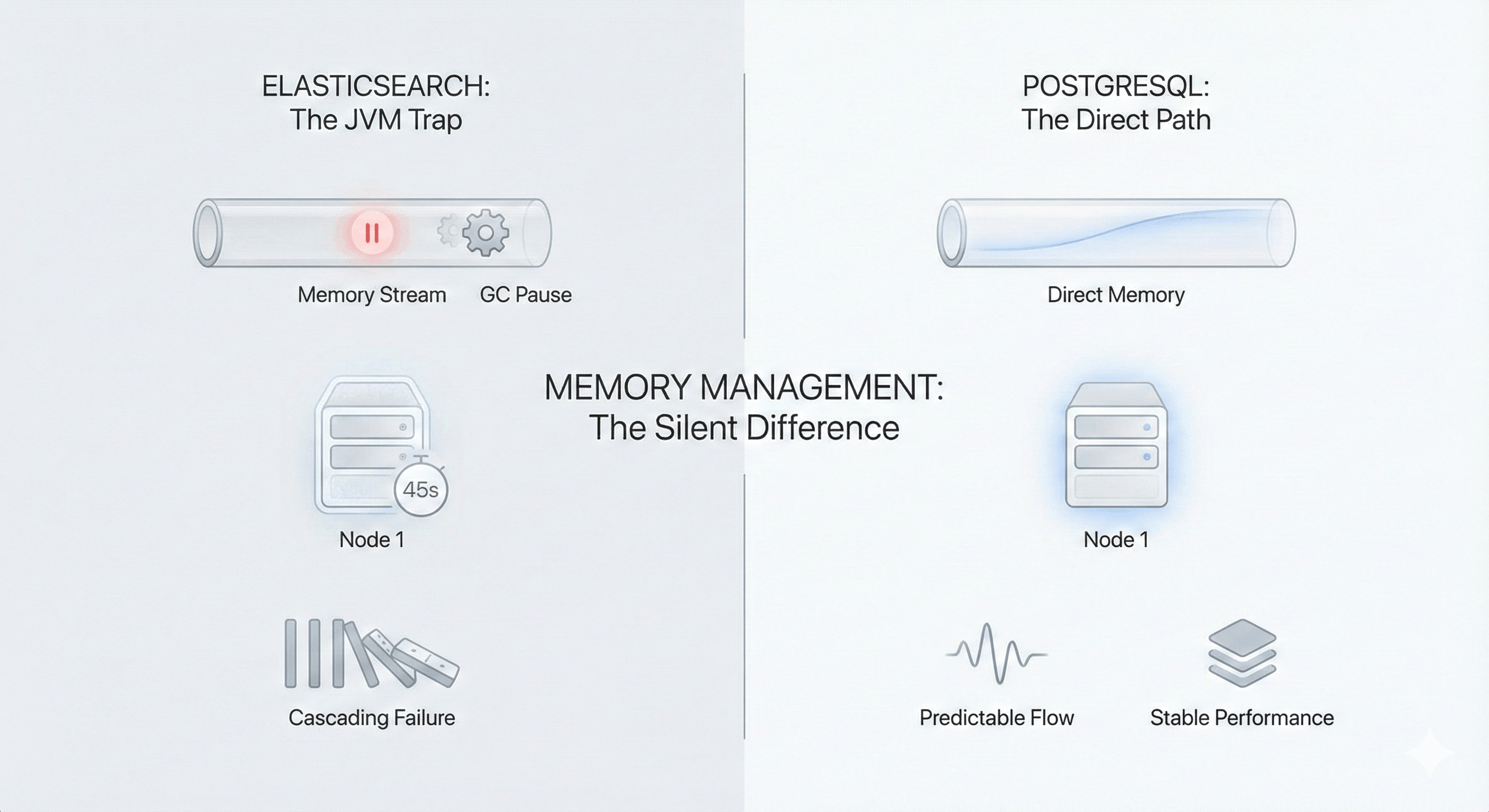

Elasticsearch runs on the Java Virtual Machine (JVM). This means garbage collection (GC) is part of your life.

The problem: Java periodically pauses everything to clean up unused memory. These "stop-the-world" pauses can freeze your Elasticsearch node for seconds at a time. If the pause lasts longer than 30 seconds, the cluster assumes the node is dead and starts moving data around to compensate. Now you have a cascading failure.

Say, for example, your e-commerce site is running on Black Friday. Traffic spikes, memory fills up, and Java decides it's time to clean house. Search goes unresponsive for 45 seconds. The cluster panics, starts redistributing shards, and suddenly you're dealing with a full outage instead of a brief slowdown.

Why Postgres avoids this: Postgres is written in C and manages memory directly. There's no JVM, no garbage collection, and no "stop-the-world" pauses. Memory usage is predictable and grows linearly with your workload. You still need to tune shared_buffers and work_mem, but you're not fighting a runtime that can freeze your entire process.
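As a rough sketch of what that tuning looks like, the two settings named above can be inspected and changed from any SQL session. The values here are placeholders chosen for illustration, not recommendations; sensible numbers depend on your RAM and workload.

```sql
-- Inspect the current values of the two settings mentioned above.
SHOW shared_buffers;
SHOW work_mem;

-- Illustrative values only (assumptions, not sizing advice).
ALTER SYSTEM SET shared_buffers = '4GB';  -- takes effect after a server restart
ALTER SYSTEM SET work_mem = '64MB';       -- takes effect after a config reload

-- Apply reloadable settings without restarting.
SELECT pg_reload_conf();
```

Note that work_mem applies per sort or hash operation, so a large value multiplied by many concurrent queries can add up quickly.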

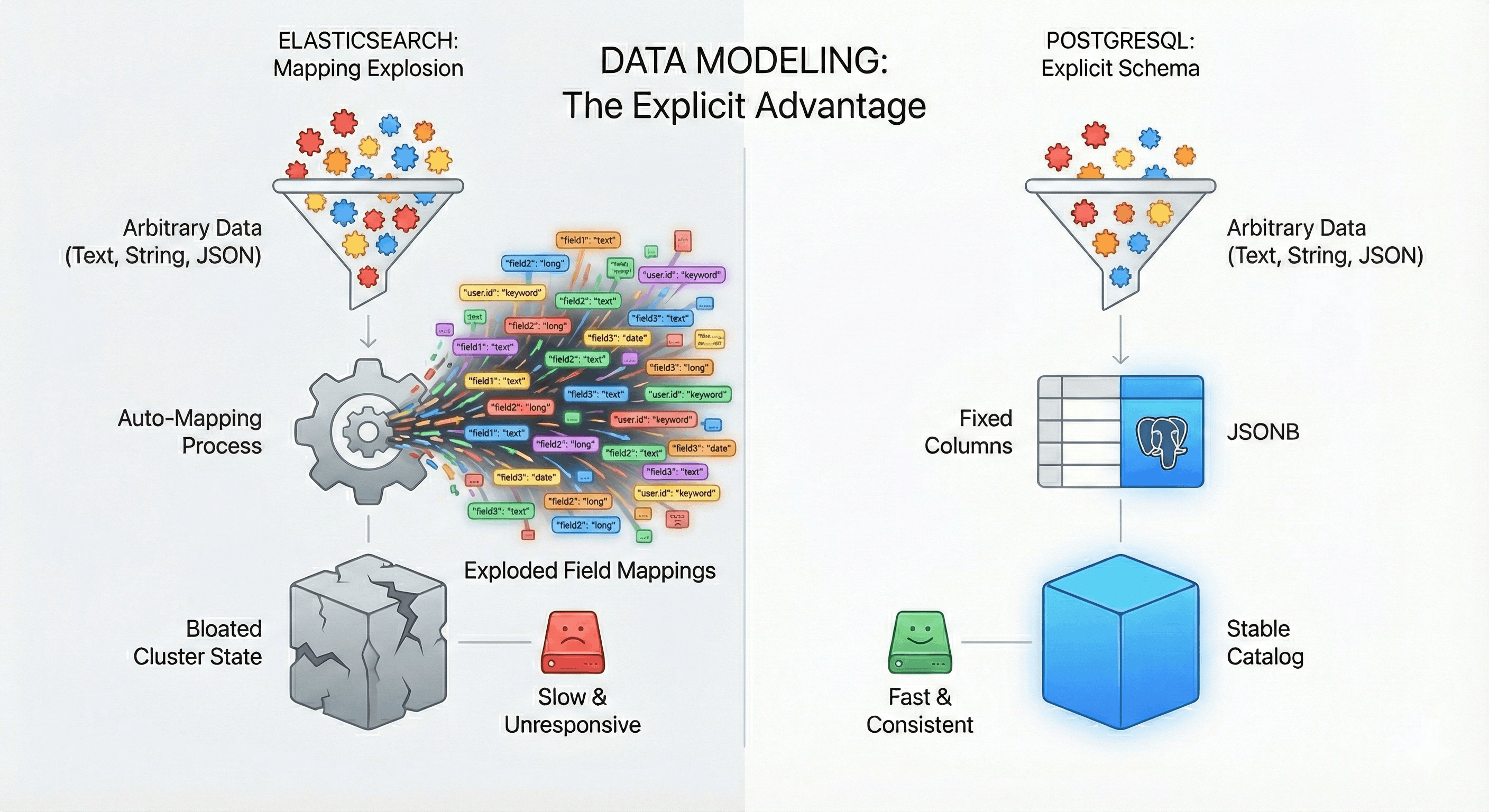

Elasticsearch tries to be helpful by automatically detecting the type of data you're storing. Send it a field called user_id with a number, and it remembers "user_id is a number." Convenient, right?

The problem: This "helpful" feature becomes a nightmare with semi-structured data. If your application logs include arbitrary metadata keys, or your users can create custom fields, Elasticsearch creates a new mapping for each one. Thousands of fields later, your cluster state is massive, your master node is struggling, and everything slows to a crawl.

Say you're logging API requests and including the request body as JSON. One customer sends a payload with 500 unique keys. Elasticsearch dutifully creates 500 new field mappings. Multiply that by thousands of requests, and suddenly you have tens of thousands of fields. Your cluster becomes unresponsive, and you're scrambling to figure out why.

Why Postgres avoids this: Postgres requires you to define your schema upfront. You decide what columns exist. If you need flexible data, you use JSONB—it stores arbitrary JSON without creating new columns or bloating system catalogs. You can still index specific paths inside the JSON when needed. The schema is explicit, which means surprises are rare.
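Here is a minimal sketch of that JSONB pattern, using the API request logging scenario from above. The table, column, and key names are hypothetical and only there to make the example self-contained.

```sql
-- Hypothetical table for API request logs; all names are illustrative.
CREATE TABLE api_request_logs (
    id          BIGINT PRIMARY KEY,
    received_at TIMESTAMPTZ NOT NULL DEFAULT now(),
    payload     JSONB NOT NULL
);

-- A payload with arbitrary keys is still one value in one column.
INSERT INTO api_request_logs (id, payload)
VALUES (1, '{"customer_id": "c_42", "plan": "pro", "custom_key_17": true}');

-- Index only the path you actually query (expression index on one key).
CREATE INDEX idx_logs_customer
    ON api_request_logs ((payload ->> 'customer_id'));

-- Optional: a GIN index supports containment queries on any key.
CREATE INDEX idx_logs_payload
    ON api_request_logs USING gin (payload jsonb_path_ops);

-- Lookup by one specific path.
SELECT id, received_at
FROM api_request_logs
WHERE payload ->> 'customer_id' = 'c_42';

-- Containment query served by the GIN index.
SELECT id
FROM api_request_logs
WHERE payload @> '{"plan": "pro"}';
```

However many distinct keys customers send, the table still has three columns and two indexes.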

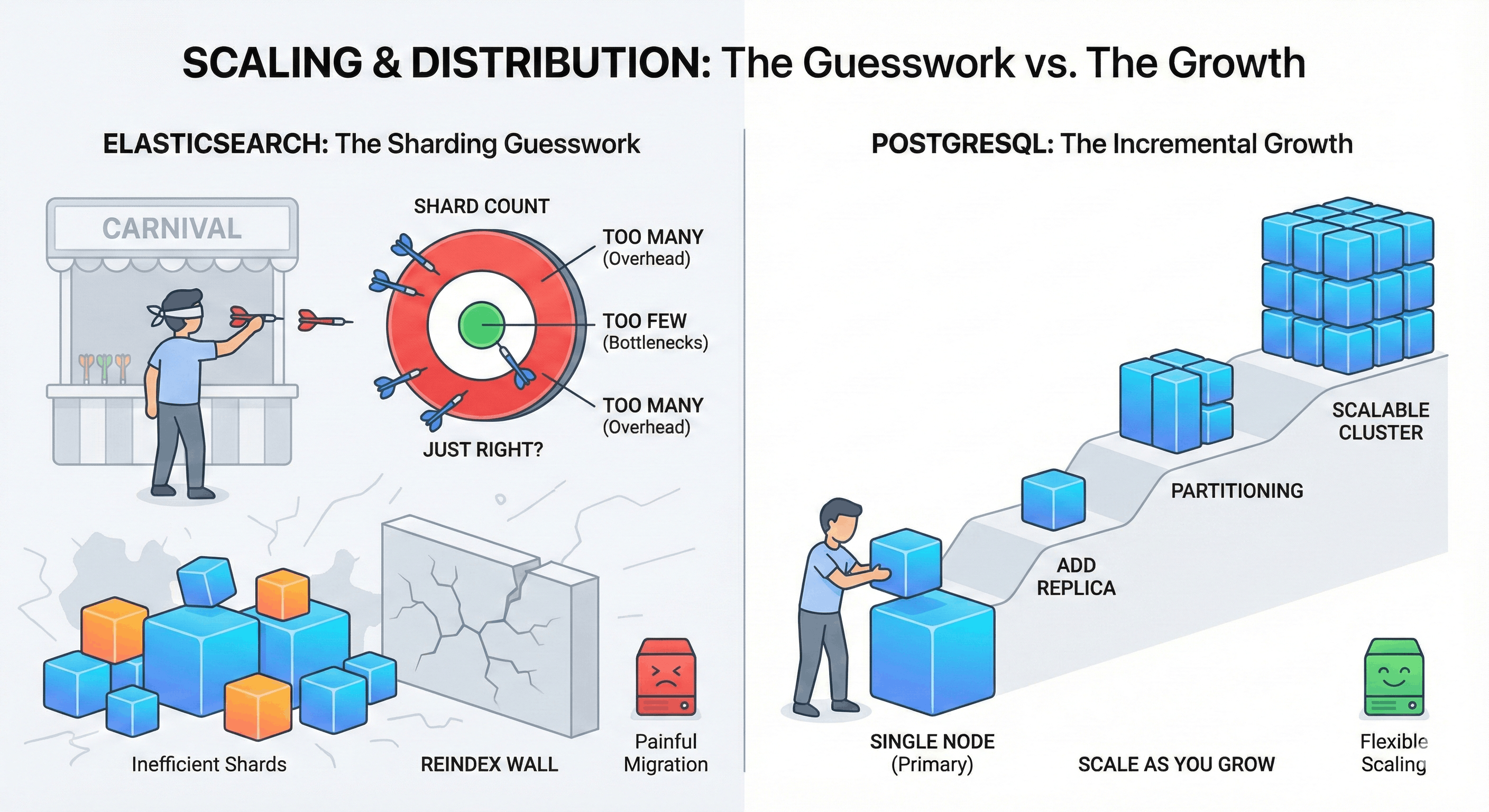

Elasticsearch splits your data into "shards" to distribute it across machines. You have to decide how many primary shards to use when you create an index. Choose wisely, because you can't change it in place later: changing the primary shard count means building a new index.

The problem: Too many shards? Each one consumes memory, CPU, and file handles. You're wasting resources on overhead. Too few shards? You can't parallelize queries effectively, and recovery takes forever when a node dies. And here's the kicker: there's no formula. It depends on your data size, query patterns, hardware, and growth rate. It's guesswork.

Say you create an index with 5 shards because that was the default before Elasticsearch 7.0 (it's 1 now). Six months later, your data has grown 10x, and those 5 shards are now bottlenecks. Your options? Use the split API, which requires blocking writes to the index and produces a new one, or create a new index with more shards and reindex all your data. Hope you have the disk space and time for that migration.

Why Postgres avoids this: Postgres doesn't shard by default—your data lives in tables on a single node. When you need to scale reads, you add replicas. When tables get large, you use declarative partitioning (by time, by tenant, etc.). These decisions can be made later, as your data grows, rather than upfront when you're guessing. And if you do need horizontal sharding someday, tools like Citus exist. But most applications never get there.
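For a sense of what declarative partitioning by time looks like, here is a small sketch. The events table and its columns are made up for illustration, and monthly ranges are just one possible choice.

```sql
-- Hypothetical events table, partitioned by month; names are illustrative.
CREATE TABLE events (
    id         BIGINT NOT NULL,
    tenant_id  INT NOT NULL,
    created_at TIMESTAMPTZ NOT NULL,
    body       TEXT,
    PRIMARY KEY (id, created_at)  -- the partition key must be part of the primary key
) PARTITION BY RANGE (created_at);

CREATE TABLE events_2026_01 PARTITION OF events
    FOR VALUES FROM ('2026-01-01') TO ('2026-02-01');

CREATE TABLE events_2026_02 PARTITION OF events
    FOR VALUES FROM ('2026-02-01') TO ('2026-03-01');

-- Growing later is one more statement, not a reindex of existing data.
CREATE TABLE events_2026_03 PARTITION OF events
    FOR VALUES FROM ('2026-03-01') TO ('2026-04-01');

-- Rows are routed to the right partition automatically.
INSERT INTO events (id, tenant_id, created_at, body)
VALUES (1, 1, '2026-02-15 12:00+00', 'hello');

-- Show which partition each row landed in.
SELECT tableoid::regclass AS partition_name, id
FROM events;
```

Queries that filter on created_at only touch the partitions whose ranges match, and old partitions can be detached or dropped without rewriting the rest.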

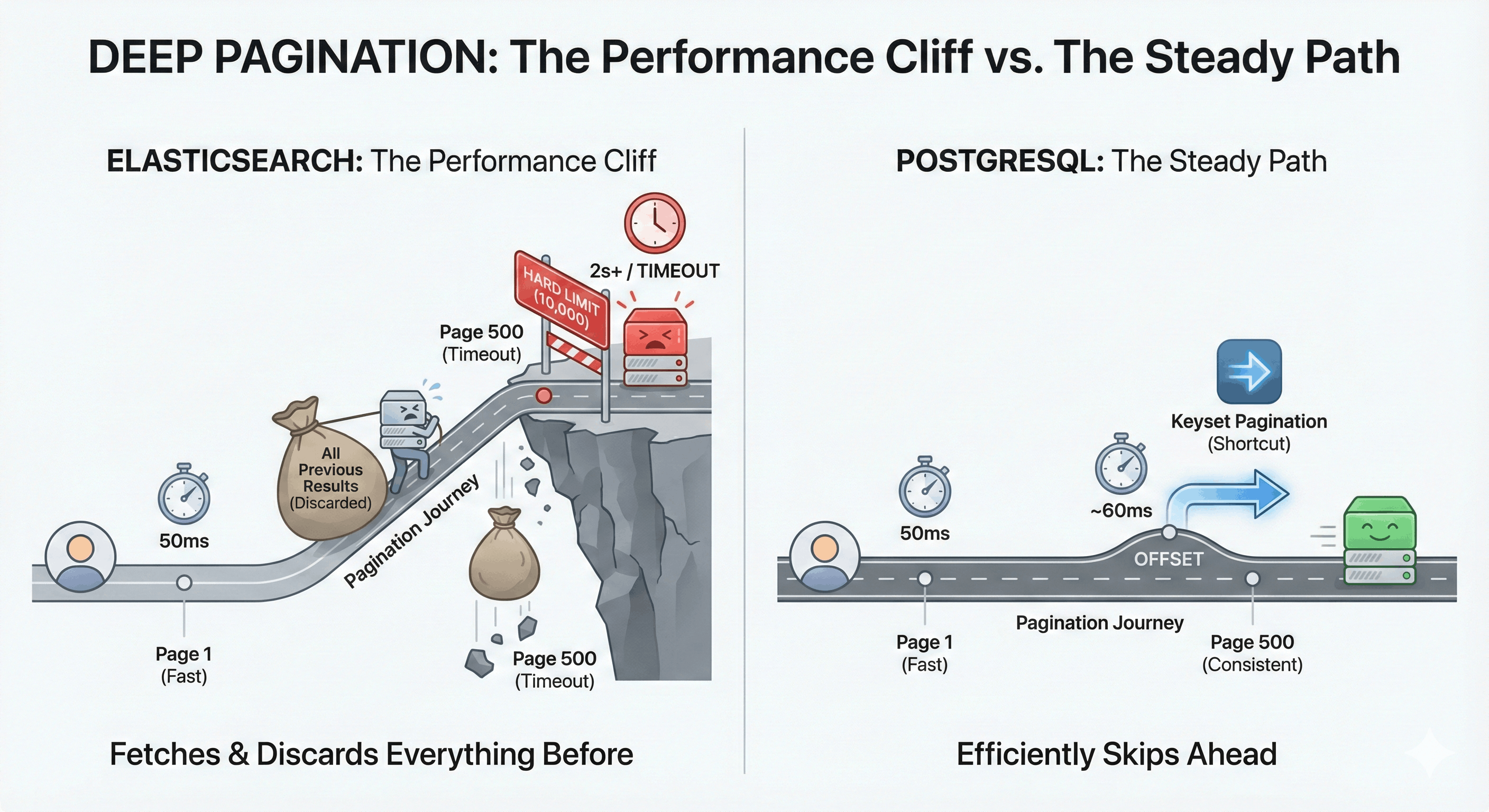

Users click "next page" a lot. They expect page 500 to load as fast as page 1. Elasticsearch has other plans.

The problem: When you ask for page 500 at 10 results per page (results 4,991-5,000), Elasticsearch doesn't skip to result 4,991. Every shard fetches and ranks its own top 5,000 hits, then sends them to a coordinating node that merges them and throws away the first 4,990. The deeper you paginate, the more work everyone does.

Say a user searches your product catalog and starts browsing. Page 1 loads in 50ms. Page 10 takes 200ms. By page 100, it's taking 2 seconds. Page 500? Timeout. Elasticsearch actually has a hard limit (index.max_result_window, default 10,000) specifically because this gets so bad.

References:

search_after for deep pagination

Why Postgres avoids this: LIMIT 10 OFFSET 5000 in Postgres doesn't have a hard ceiling like ES. The query planner handles it without needing to score every document. That said, large offsets still have overhead, so keyset pagination (WHERE id > last_seen_id) is better for deep pages. But you won't hit a wall at 10,000 results, and you won't see the dramatic slowdown ES has.
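The two pagination styles mentioned above can be compared side by side. This is a sketch on a hypothetical products table filled with generated rows; the table name and the 100,000 row count are assumptions made for the example.

```sql
-- Hypothetical catalog table; names and row count are illustrative.
CREATE TABLE products (
    id   BIGINT PRIMARY KEY,
    name TEXT NOT NULL
);

INSERT INTO products (id, name)
SELECT g, 'product ' || g
FROM generate_series(1, 100000) AS g;

-- OFFSET pagination: the server still walks past the first 5000 rows.
SELECT id, name
FROM products
ORDER BY id
LIMIT 10 OFFSET 5000;

-- Keyset pagination: remember the last id of the previous page (5000 here)
-- and start right after it; the primary key index seeks straight there.
SELECT id, name
FROM products
WHERE id > 5000
ORDER BY id
LIMIT 10;
```

Both queries return ids 5001 through 5010 on this data. The difference is that the cost of the keyset version stays flat as you go deeper, while the OFFSET version grows with the offset.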

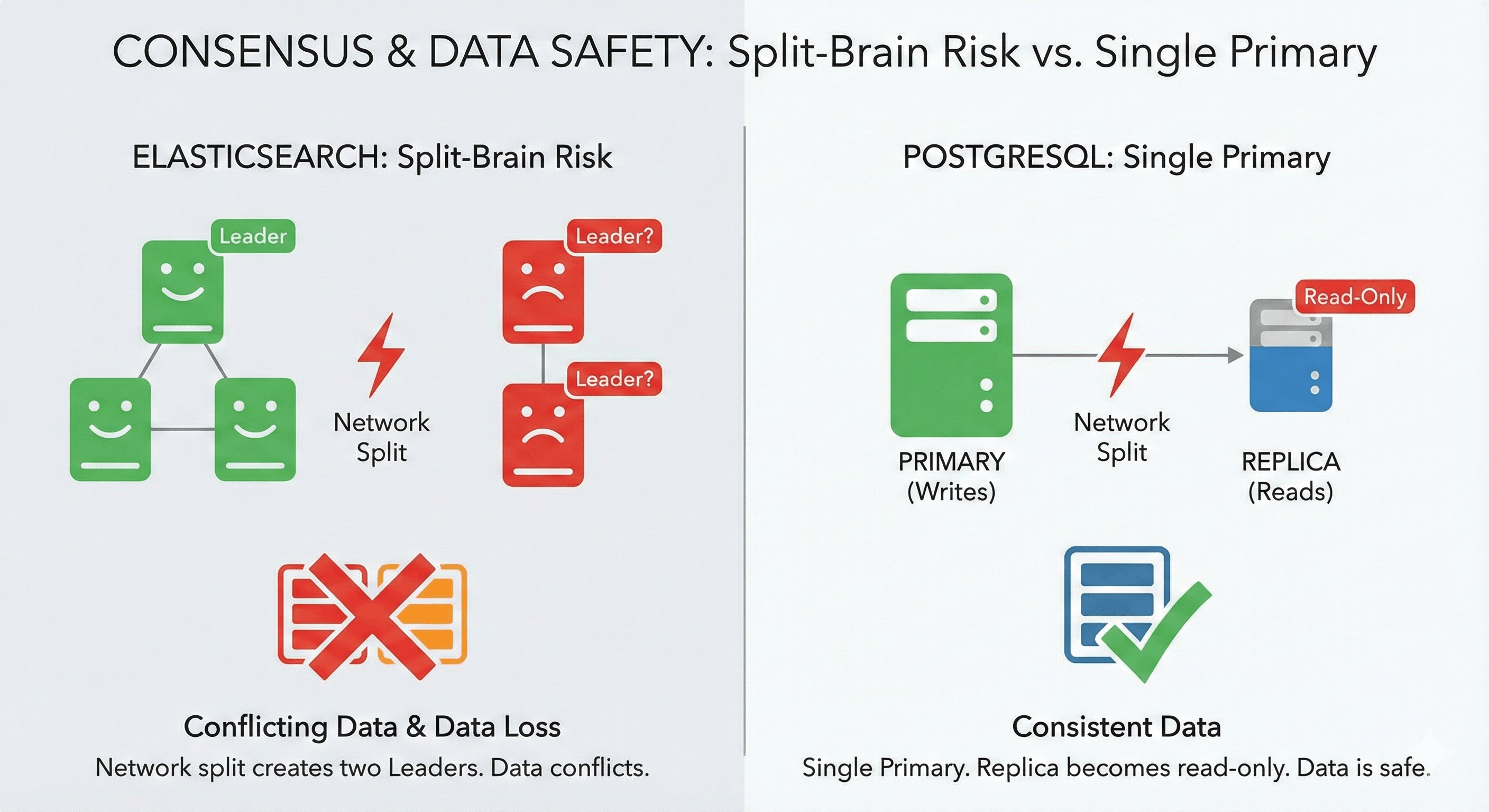

Elasticsearch is a distributed system. Distributed systems fail in distributed ways.

The problem: Imagine your cluster has 5 nodes, and a network issue splits them into two groups: 3 nodes on one side, 2 on the other. If quorum is misconfigured (before Elasticsearch 7.0 this meant setting discovery.zen.minimum_master_nodes by hand), both groups can elect their own master and start accepting writes independently. When the network heals, you have two conflicting versions of your data. This is "split-brain," and it can cause permanent data loss.

Say your Elasticsearch cluster spans two data centers for redundancy. A network blip between them lasts 60 seconds. Both sides elect a master. Users on both sides keep searching and indexing. When connectivity returns, some documents exist in one half, some in the other, and some have conflicting updates. Reconciling this manually is a nightmare.

Why Postgres avoids this: Postgres uses a simpler primary/replica model. There's one primary that accepts writes; replicas are read-only. This doesn't make split-brain impossible—any distributed system can have it—but the architecture makes it much harder to create accidentally. Tools like Patroni, pg_auto_failover, and managed services (like Tiger Data) handle leader election and fencing automatically. You're not managing quorum math yourself.

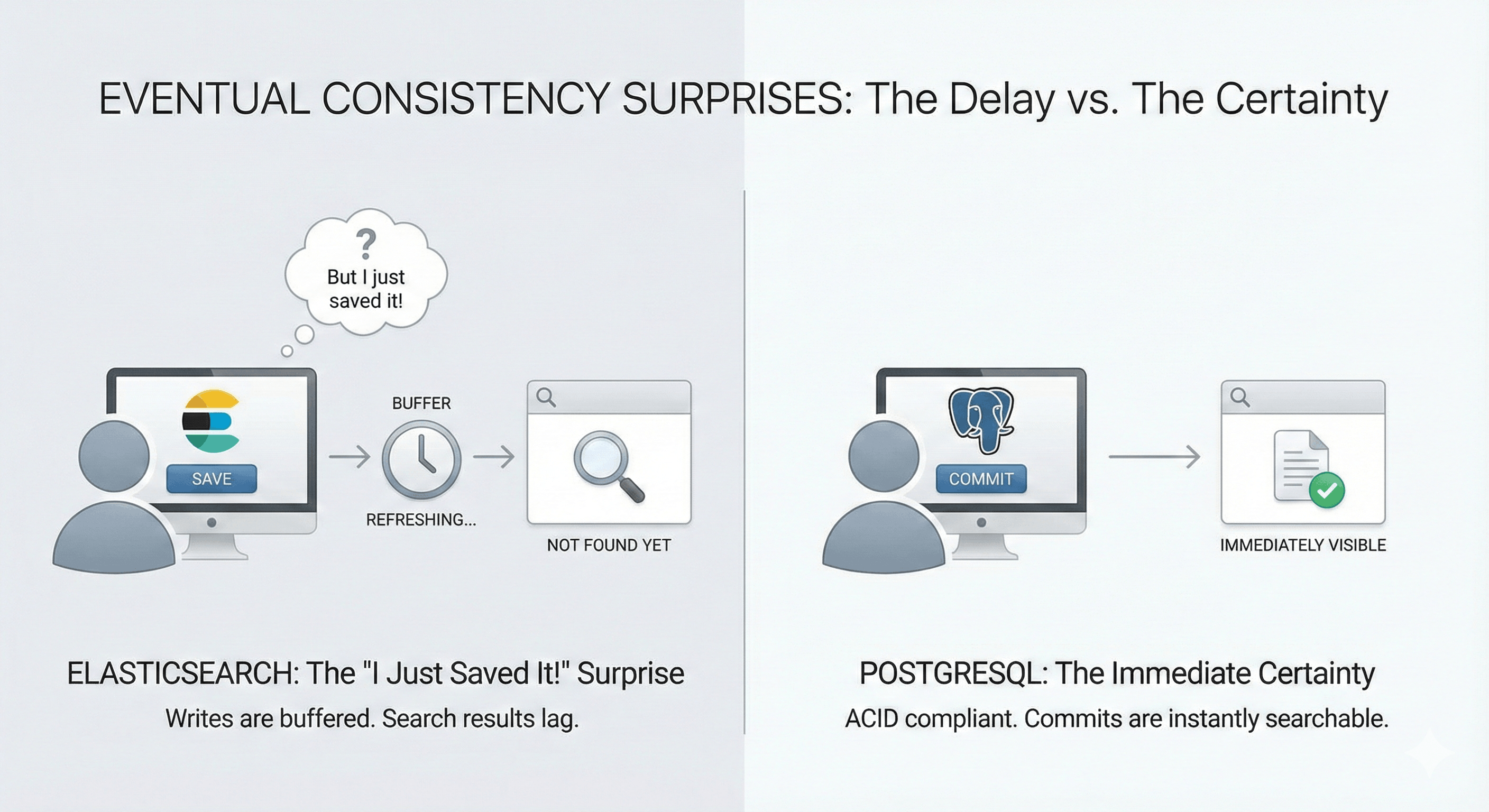

When you save something to Elasticsearch, it's not immediately searchable. This catches a lot of teams off guard.

The problem: Elasticsearch buffers writes and periodically "refreshes" to make them searchable (default: every 1 second). But under load, or with specific configurations, that delay can grow. Users create a record, immediately search for it, and get nothing. "But I just saved it!"

Say a user posts a comment on your platform. Your app saves it to Postgres (source of truth) and indexes it to Elasticsearch. The user refreshes the page to see their comment. The Postgres query shows it, but the search results don't include it yet because Elasticsearch hasn't been refreshed. The user thinks something is broken and posts again. Now you have duplicate comments.

Why Postgres avoids this: Postgres is ACID-compliant. When your transaction commits, the data is immediately visible to all subsequent queries—on the same connection or any other. If you're using BM25 search via pg_textsearch, the index updates synchronously—no refresh interval. No eventual consistency, no "I just saved it but can't find it" bugs.
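To make the read-after-write behavior concrete, here is a small sketch of the comment scenario. It deliberately uses Postgres's built-in full-text search (tsvector with a GIN index) rather than pg_textsearch, so it runs on a stock install; the comments table and its columns are hypothetical.

```sql
-- Hypothetical comments table using built-in full-text search
-- (a stand-in for pg_textsearch; names are illustrative).
CREATE TABLE comments (
    id       BIGINT PRIMARY KEY,
    body     TEXT NOT NULL,
    body_tsv tsvector GENERATED ALWAYS AS (to_tsvector('english', body)) STORED
);

CREATE INDEX idx_comments_tsv ON comments USING gin (body_tsv);

-- The user posts a comment.
BEGIN;
INSERT INTO comments (id, body)
VALUES (1, 'Great write-up on shard sizing');
COMMIT;

-- Any query that starts after the COMMIT, on any connection, finds the row.
SELECT id, body
FROM comments
WHERE body_tsv @@ to_tsquery('english', 'shard');
```

The index entry is written as part of the same transaction as the row, so there is no window where the comment exists but the search can't see it.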

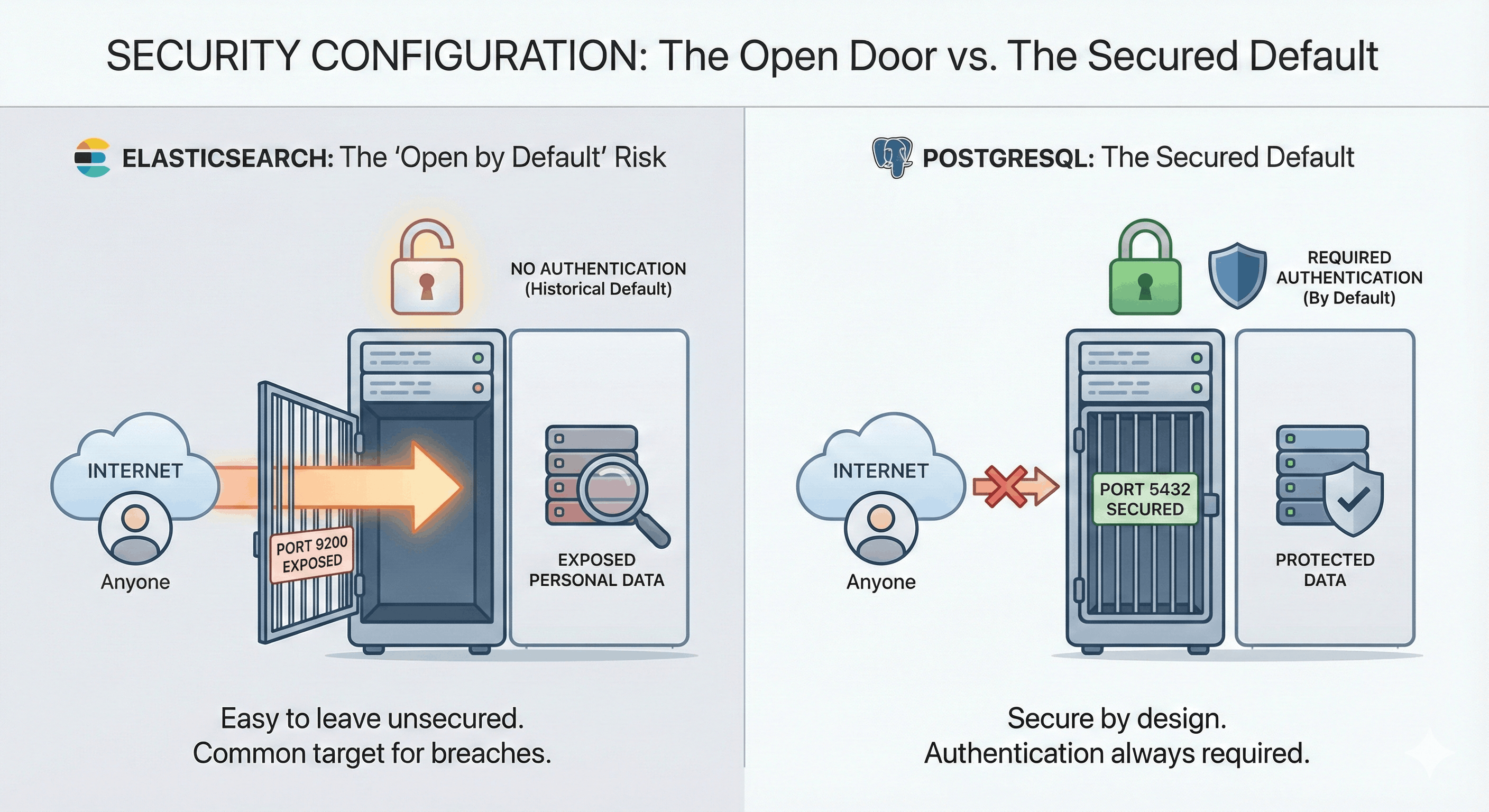

Elasticsearch has been responsible for some of the largest data breaches in history. Not because it's insecure by design, but because it's easy to misconfigure.

The problem: For years, Elasticsearch shipped with no authentication by default. Spin up a cluster, expose port 9200, and anyone on the internet could read your data. Security has been enabled by default since version 8.0, but the damage is done: countless clusters were exposed, and security misconfigurations remain common.

Here's a real example: in 2019, researchers found an exposed Elasticsearch server containing 1.2 billion records of personal data—social media profiles, email addresses, phone numbers. The cluster had no authentication. This wasn't a sophisticated hack; someone just found an open port. Similar breaches happen regularly.

Why Postgres avoids this: Postgres ships closed to the network. By default it listens only on localhost, and every remote connection has to match a rule in pg_hba.conf that names an authentication method, so there's no "open to the internet by default" mode. SSL/TLS encryption, role-based access control, and row-level security are all built in and battle-tested over decades. Misconfiguration is still possible (a trust rule for 0.0.0.0/0 will do it), but the secure path is the default path.
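As an illustration of the built-in access controls mentioned above, this sketch combines a login role, a table grant, and a row-level security policy. The role, table, and the app.current_tenant setting are hypothetical names chosen for the example, and the password is a placeholder.

```sql
-- Hypothetical read-only application role; replace the placeholder password.
CREATE ROLE app_reader LOGIN PASSWORD 'change-me';

-- Hypothetical multi-tenant table; names are illustrative.
CREATE TABLE customer_notes (
    id     BIGINT PRIMARY KEY,
    tenant TEXT NOT NULL,
    note   TEXT NOT NULL
);

-- Role-based access control: this role can read, nothing else.
GRANT SELECT ON customer_notes TO app_reader;

-- Row-level security: each session sees only its own tenant's rows.
-- (The table owner bypasses this unless FORCE ROW LEVEL SECURITY is set.)
ALTER TABLE customer_notes ENABLE ROW LEVEL SECURITY;

CREATE POLICY tenant_isolation ON customer_notes
    FOR SELECT
    TO app_reader
    USING (tenant = current_setting('app.current_tenant', true));

-- In an app_reader session, choose the tenant before querying:
-- SET app.current_tenant = 'acme';
```

If the setting is never set, current_setting returns NULL and the policy matches no rows, so the failure mode is an empty result rather than a leak.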

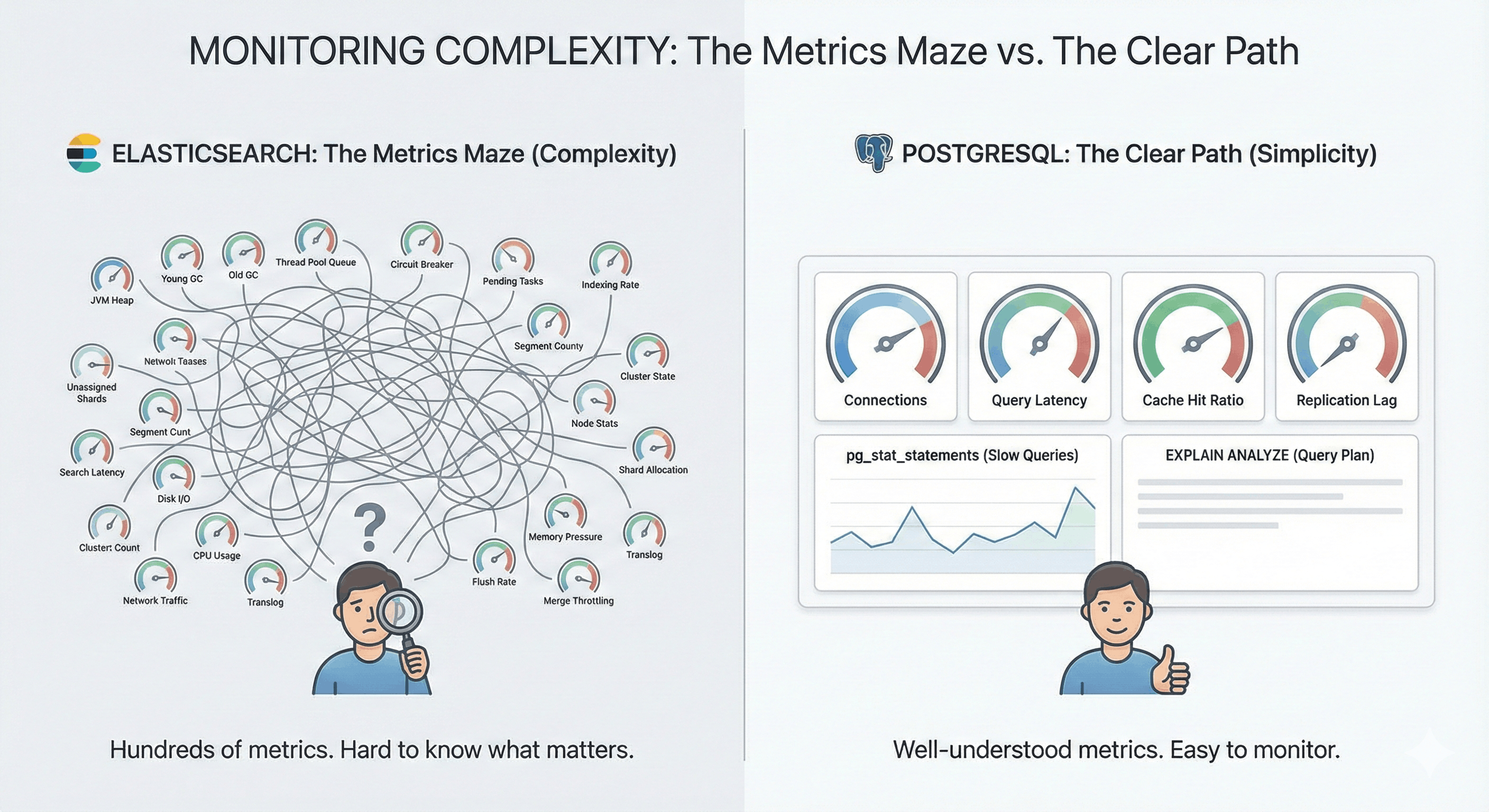

Elasticsearch gives you hundreds of metrics. The problem is knowing which ones matter.

The problem: JVM heap usage, young GC time, old GC time, thread pool queue sizes, circuit breaker trips, pending tasks, unassigned shards, indexing rate, search latency, segment count... Elasticsearch exposes all of this, but understanding what's normal vs. concerning requires deep expertise. Most teams set up basic monitoring, miss the early warning signs, and only find out something's wrong when it's already broken.

Say your cluster has been running fine for months. One day, search latency spikes. You check CPU and memory—they look okay. Disk? Fine. What you didn't notice: the "pending tasks" queue has been growing for days, and the "circuit breaker" tripped twice last week. By the time you figure this out, users have been complaining for hours.

Why Postgres avoids this: Postgres monitoring is well-understood after 30+ years. The key metrics are simpler: connections, query latency, cache hit ratio, replication lag. pg_stat_statements shows your slowest queries. EXPLAIN ANALYZE tells you exactly why. Tools like pgBadger, the pg_stat_activity view, and any standard APM will get you 90% of the way there. You don't need to become a Postgres internals expert to keep it healthy.
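The four key metrics listed above map onto a handful of queries against standard statistics views. This is a sketch that assumes PostgreSQL 13 or later (for the mean_exec_time column) and that pg_stat_statements is listed in shared_preload_libraries.

```sql
-- Cache hit ratio for the current database.
SELECT round(100.0 * blks_hit / NULLIF(blks_hit + blks_read, 0), 2) AS cache_hit_pct
FROM pg_stat_database
WHERE datname = current_database();

-- Connections grouped by state (active, idle, idle in transaction, ...).
SELECT state, count(*) AS connections
FROM pg_stat_activity
GROUP BY state
ORDER BY count(*) DESC;

-- Replication lag in bytes per replica; run this on the primary.
SELECT application_name,
       pg_wal_lsn_diff(pg_current_wal_lsn(), replay_lsn) AS lag_bytes
FROM pg_stat_replication;

-- Slowest queries by mean execution time.
CREATE EXTENSION IF NOT EXISTS pg_stat_statements;

SELECT calls,
       round(mean_exec_time::numeric, 2) AS mean_ms,
       left(query, 80) AS query
FROM pg_stat_statements
ORDER BY mean_exec_time DESC
LIMIT 10;
```

From there, running EXPLAIN ANALYZE on any query from the last result shows where its time goes.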

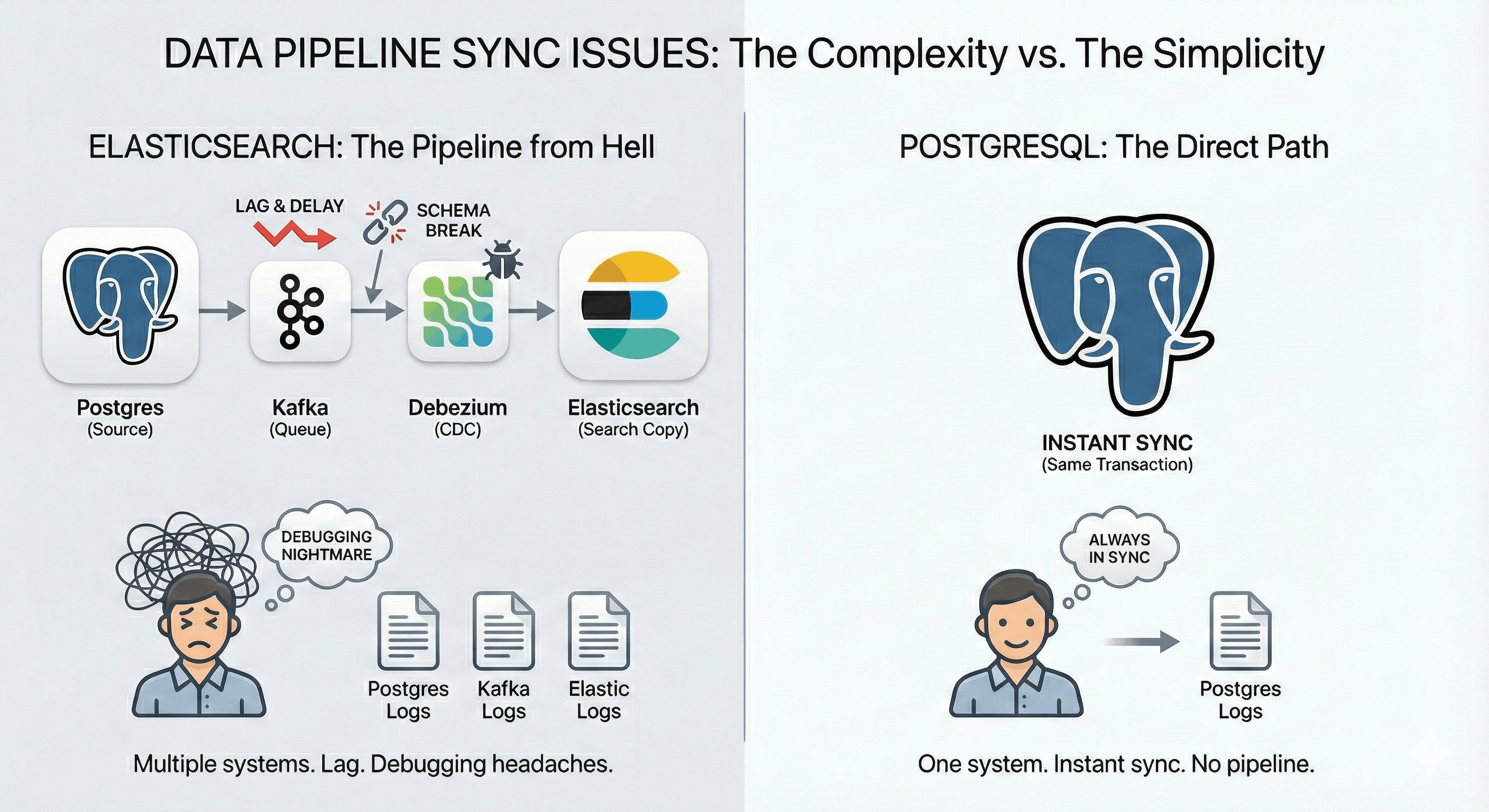

Your real data lives in Postgres. Elasticsearch is just a copy for search. Keeping them in sync is harder than it sounds.

The problem: The typical architecture is Postgres → Kafka/Debezium → Elasticsearch. That's three systems. When data updates in Postgres, it flows through Kafka, gets transformed, and lands in Elasticsearch. What could go wrong? Everything. Kafka lag causes stale search results. Schema changes break the connector. A bug in your transformer corrupts data. And when something breaks, you're debugging across three different systems with three different log formats.

Say a customer updates their email address. The change saves to Postgres immediately. But the Kafka consumer is backed up, and Elasticsearch doesn't get the update for 10 minutes. During that window, any email sent via a search lookup goes to the old address. Customer support gets an angry call. You spend two hours figuring out where the delay happened.

Why Postgres avoids this: If search lives in Postgres (using BM25 via pg_textsearch), there's no pipeline to manage. When you update a row, the search index updates in the same transaction. No Kafka, no Debezium, no sync jobs, no lag, no "which system has the latest data?" debugging. Your source of truth and your search index are the same thing.

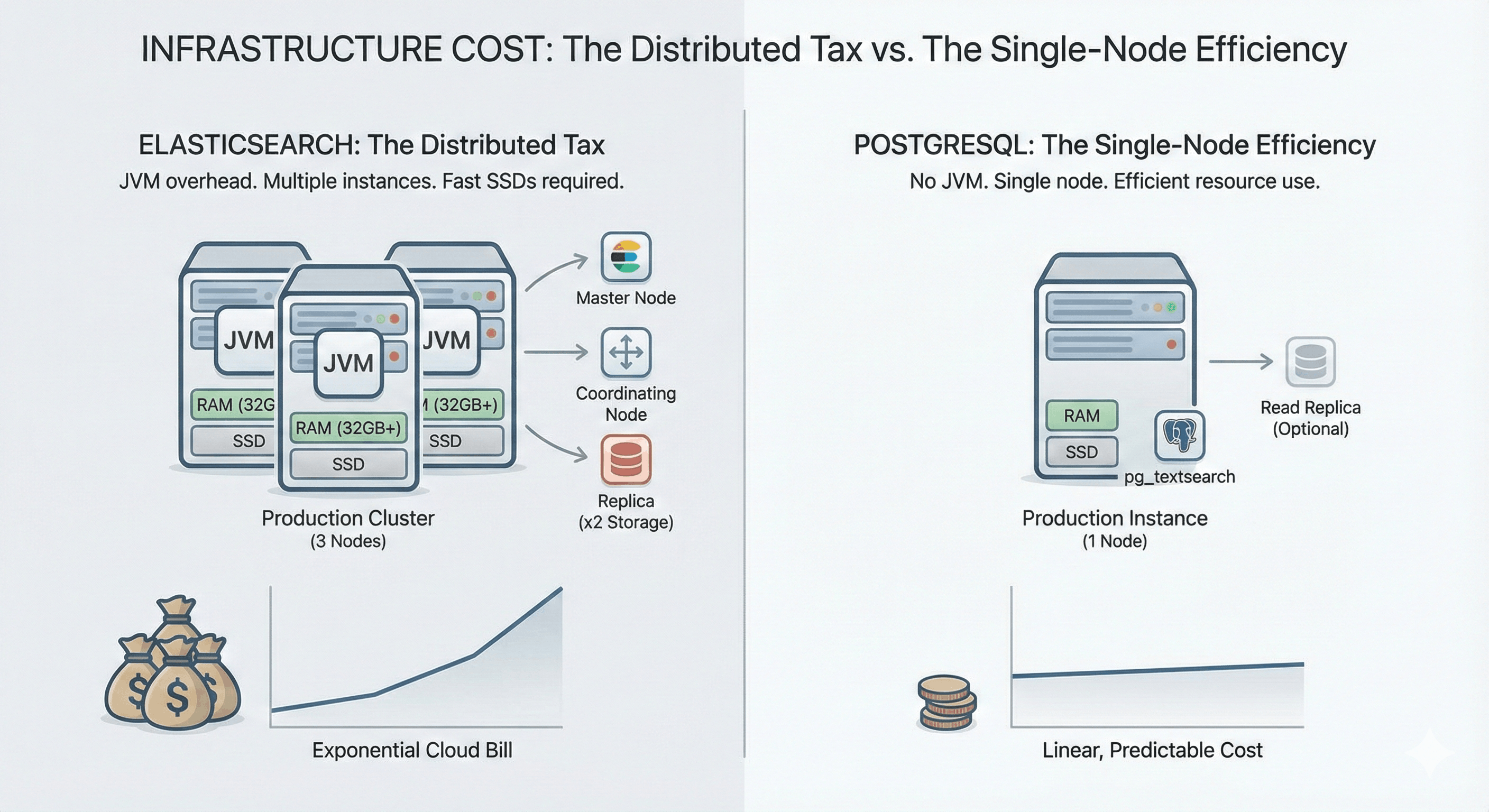

Elasticsearch clusters are expensive to run. This isn't a bug—it's the architecture.

The problem: ES runs on the JVM, which means each node needs significant RAM (typically 16-64GB, with half allocated to heap). Production clusters require at least 3 nodes for high availability and quorum. Larger deployments need dedicated master nodes, coordinating nodes, and data nodes—that's a lot of instances. And because ES stores everything in Lucene segments, you need fast SSDs to avoid I/O bottlenecks. Add replicas for redundancy, and your storage costs multiply.

Say you spin up a "small" production cluster: 3 nodes, 32GB RAM each, SSDs. That's your baseline before you've even scaled. Now add a separate cluster for staging. And one for testing. Your cloud bill starts climbing, and you're not even at high traffic yet.

Why Postgres avoids this: Postgres runs on a single node for most workloads. No JVM overhead means lower memory requirements. You add read replicas when you actually need them, not because the architecture demands it. A single Postgres instance with pg_textsearch can handle substantial search workloads on hardware that would barely run one ES node. And if you're on a managed service like Tiger Data, you're paying for one database, not a distributed cluster.

The Alternative: Keep Search in Postgres

If you've made it this far, you've probably nodded along to at least a few of these issues. Maybe all ten. The question is: what do you do about it?

Can you overcome all these issues? Yes. Hire an Elasticsearch specialist (or a team of them). Invest in training. Build expertise over years. Companies do it every day.

But here's the question: do you want to?

It's like the shift from gas cars to electric cars. When you go electric, you don't just swap out the engine. You eliminate an entire category of problems: no oil changes, no transmission repairs, no spark plugs, no fuel injectors, no exhaust systems. The complexity just disappears.

That's what's happening with search. Extensions like pg_textsearch (BM25 ranking), pgvectorscale (vector search), and pgai (automatic embeddings) mean search can now live inside Postgres. No separate cluster. No JVM. No data pipelines. No sync jobs. A whole category of problems just goes away.

Every issue above has the same root cause: Elasticsearch is a separate system. Separate systems mean separate infrastructure, separate expertise, separate failure modes, and separate pipelines.

What if you didn't need a separate system?

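PostgreSQL now has BM25 ranking (pg_textsearch), vector search (pgvectorscale), and automatic embedding generation (pgai).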

And for AI workflows, there's a bonus: when everything lives in Postgres, you can fork your database and get an instant copy of your search index, vectors, and embeddings. Try doing that with Elasticsearch.

For many workloads, "just use Postgres" isn't a compromise. It's a simplification.

One caveat: pg_textsearch doesn't currently support faceted search, so e-commerce with "filter by brand/price/color" is still a gap. For RAG, document search, and knowledge bases, you're covered.

Get Started in 5 Minutes

Ready to try hybrid search in Postgres? Here's all you need:

    -- 1. Enable extensions
    CREATE EXTENSION pg_textsearch;        -- BM25 keyword search
    CREATE EXTENSION vectorscale CASCADE;  -- Vector search (includes pgvector)
    CREATE EXTENSION ai;                   -- Auto-embedding generation (optional)

    -- 2. Create your table
    CREATE TABLE documents (
        id SERIAL PRIMARY KEY,
        title TEXT,
        content TEXT,
        embedding vector(1536)
    );

    -- 3. Create indexes
    CREATE INDEX idx_bm25 ON documents USING bm25(content)
        WITH (text_config = 'english');
    CREATE INDEX idx_vector ON documents USING diskann(embedding);

    -- 4. Hybrid search with RRF (one query, two search types)
    WITH
    bm25_results AS (
        -- Top 20 keyword matches, ranked 1..20
        SELECT id, ROW_NUMBER() OVER (
            ORDER BY content <@> to_bm25query('your search query', 'idx_bm25')
        ) AS rank
        FROM documents
        -- Repeat the ordering so LIMIT keeps the best 20, not an arbitrary 20
        ORDER BY content <@> to_bm25query('your search query', 'idx_bm25')
        LIMIT 20
    ),
    vector_results AS (
        -- Top 20 nearest neighbors, ranked 1..20
        SELECT id, ROW_NUMBER() OVER (
            ORDER BY embedding <=> $1  -- $1 is your query embedding
        ) AS rank
        FROM documents
        ORDER BY embedding <=> $1
        LIMIT 20
    )
    -- Fuse both rankings: each list contributes 1/(60 + rank)
    SELECT d.*,
        COALESCE(1.0/(60 + b.rank), 0) + COALESCE(1.0/(60 + v.rank), 0) AS rrf_score
    FROM documents d
    LEFT JOIN bm25_results b ON d.id = b.id
    LEFT JOIN vector_results v ON d.id = v.id
    WHERE b.id IS NOT NULL OR v.id IS NOT NULL
    ORDER BY rrf_score DESC
    LIMIT 10;

That's it. BM25 + vectors + RRF, all in Postgres. No Elasticsearch, no Kafka, no sync jobs.
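To get a feel for how the RRF scores in that query behave, here is a small arithmetic check using the same constant of 60. The ranks are made up for illustration.

```sql
SELECT
    1.0/(60 + 1) + 1.0/(60 + 3) AS in_both_lists,  -- rank 1 in BM25, rank 3 in vector
    1.0/(60 + 1)                AS in_one_list;    -- rank 1 in BM25, absent from vector
```

The first value is roughly 0.0323 and the second roughly 0.0164, so a document that shows up in both lists outranks one that tops only a single list. That is the whole point of the fusion step.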

Try it on Tiger Data—all extensions come pre-installed.
