
Oct 29, 2025

Table of contents

01 TL;DR
02 Introduction
03 Thesis and Motivation
04 Why We Had to Build Our Own
05 Goals and Principles
06 Architecture
07 Outcomes and Capabilities
08 Operations and Availability
09 Closing

TL;DR: Fluid Storage is our next-generation storage architecture, a distributed block layer that reimagines systems like EBS by combining zero-copy forks, true elasticity, and synchronous replication. Because it operates at the block layer, Fluid Storage is fully compatible with Postgres, as well as with other databases and file systems. Every database in Tiger Cloud's free tier now runs on Fluid Storage, giving developers direct access to these capabilities.

Try it now: Get started instantly with the Tiger CLI and MCP Server.

Partner with us: If you’re building an agentic or infrastructure platform, we’re opening early-access partnerships. Please get in touch to learn more.

Agents are the new developers, and they need a new storage layer built for how they work.

Agents spin up environments, test code, and evolve systems continuously. They need storage that can do the same: forking, scaling, and provisioning instantly, without manual work or waste.

Storage itself has evolved through eras: from static systems built for durability, to dynamic systems built for elasticity through innovations like separating compute from storage. But today’s “elastic” infrastructure isn’t truly elastic. After operating tens of thousands of Postgres instances in Tiger Cloud, we’ve seen those limits firsthand: systems that scale slowly, waste capacity, and block iteration. We’re now entering the era of fluid systems: storage that moves as continuously as the workloads it serves.

Fluid Storage was built for that world: where data flows, systems iterate autonomously, and elasticity and iteration converge into a single operation.

At its core, Fluid Storage is a distributed block layer that unifies these properties through a disaggregated architecture. It combines a horizontally scalable NVMe-backed block store, a proxy layer that exposes copy-on-write volumes, and a user-space storage device driver that makes it all look like a local disk to PostgreSQL. The result: instant forks and snapshots, automatic scaling up or down, and no downtime or over-provisioning.

Each Fluid Storage cluster manages tens of thousands of volumes across workloads and tenants—from ephemeral sandboxes to production-scale systems—with consistent performance and predictable cost. In benchmark testing, a single volume sustains 110,000+ IOPS and 1.4 GB/s throughput while retaining all copy-on-write and elasticity guarantees.

It’s storage that looks like a local disk but scales like a cloud service. A new storage foundation for the age of agents.

Fluid Storage now runs every free-tier database in Tiger Cloud, giving every developer (and agent) a firsthand experience of what truly fluid infrastructure feels like. Sign up in the Tiger console or get started instantly with the Tiger CLI.

Elastic storage isn’t truly elastic.

Every engineer who’s managed databases at scale has seen it firsthand. A CI pipeline stalls waiting for a restore. A schema migration hangs mid-run because the database can’t be safely cloned. A “resize volume” request on EBS sits in “optimizing” for hours. A database read replica lags for days behind a write-heavy primary. You wait, sometimes all day, not because Postgres is slow, but because the storage substrate beneath it isn’t nearly as elastic as it claims.

Cloud storage solved many problems, but true elasticity wasn’t one of them. Amazon EBS, for instance, is billed as elastic, yet volumes can only grow once every six to twenty-four hours. You can’t shrink them, and every operation that changes IOPS or throughput has a similar cooldown. Worse, you pay for the space you allocate, not what you actually use. To avoid running out of disk, you over-provision, and that excess sits idle, wasted.

This rigidity made sense in the first two eras of storage. The static era focused on durability: keeping data alive across hardware failures. The dynamic era focused on decoupling compute and storage so each could scale independently. Both have become table stakes. We believe that the next era is the fluid era: storage that scales, forks, and contracts instantly. Not just incrementally elastic, but continuous. Storage that behaves more like software than hardware.

The arrival of agents makes this shift urgent. Agents create, modify, and deploy code autonomously. They spin up sandboxes, run migrations, benchmark results, and tear everything down again, all in seconds. Each agent needs its own isolated, ephemeral environment, but with the performance and durability of production, operating on production data. This is not just experimentation; this is how agents work. Traditional storage can’t keep up with that pace or economics.

Fluid Storage was built for this reality: a distributed block store that unifies elasticity, iteration, and durability in a single substrate. It treats scaling and forking as standard operations, not exceptions. To Postgres, it looks like a normal disk. In truth, it’s a disaggregated system that delivers high throughput, fast recovery, and instant forks—storage that finally moves as fluidly as the systems built on top of it.

Fluid Storage now serves as the default substrate for our recently announced free database service on Tiger Cloud.

For the past five years, we’ve managed tens of thousands of Postgres instances in Tiger Cloud and seen firsthand the limitations of today’s “elastic” cloud infrastructure.

When we launched Tiger Cloud in 2020, we built on Amazon EBS as our durable storage. It was reliable, well-understood, and integrated neatly with the rest of AWS. But as our fleet scaled into the thousands of customers, the limitations of EBS became clear across five dimensions: cost, scale-up performance, scale-down performance, elasticity, and recovery.

Cost.

EBS charges for allocation, not usage. A database storing 200 GB of data on a 1 TB provisioned volume still pays for the full terabyte. Tiger Cloud hides this complexity from users—we bill for storage consumed, not allocated—but that simply shifts the inefficiency from the user to us. It becomes a COGS problem instead of a usability one.

To manage it, we built adaptive algorithms that estimate a “good” allocation size based on each user’s consumption and rate of change. In practice, this was a constant balancing act: undershoot and risk running out of disk; overshoot and pay for unused capacity. For safety and user experience, we erred on the side of over-allocation.

The EBS cost problem only compounds as customers scale their services horizontally. Each read replica adds another full volume's worth of storage cost, since EBS charges per volume, not per dataset.

This cost hits both sides: vendors pay through higher COGS, and customers pay through higher storage prices. This is why usage-based storage pricing always costs more per gigabyte than allocation-based pricing.

Scale-up performance.

EBS volumes impose fixed ceilings on performance. Until recently, gp3 volumes topped out at 16,000 IOPS and 16 TB per volume. io2 volumes offered higher limits (up to 64,000 IOPS and 64 TB), but at far higher cost. Those gp3 limits have recently been raised, but higher ceilings don't address another scaling challenge: the difficulty of scaling horizontally through snapshots.

Ideally, you’d take a volume snapshot and use it to initialize a new read replica, seeding it with an exact copy of data before WAL replay begins. In theory, EBS snapshots allow this. In practice, they hydrate slowly. A restored snapshot appears immediately available, but data is fetched lazily from S3, so the replica experiences high read latency until the volume is fully loaded. We implement pre-warming strategies informed by a database’s real usage statistics, but these are effective primarily for small databases. For large, mission-critical ones, pre-warming the full working set simply takes too long.

In our experience operating Tiger Cloud, it was often faster to spin up a database from a Postgres backup stored in S3 than from an EBS snapshot in S3.

Scale-down performance.

EBS also limits how many volumes can attach to a single EC2 instance, currently twenty-four. Tiger Cloud runs each database service in its own container with its own volume, so this cap directly constrains how many database services we can host per server. That makes it difficult to run large numbers of small, mostly idle instances cost-efficiently. In effect, EBS became a bottleneck for building a true free tier.

The alternative for a free or low-cost tier was to pack multiple logical databases into a single PostgreSQL cluster instead of isolating each service in its own container and volume. That would have introduced operational trade-offs we wanted to avoid: weak performance isolation, poor backup and restore options, and difficulty scaling seamlessly.

Elasticity.

EBS technically supports resizing, but only once every 6–24 hours. That cooldown applies not just to disk capacity increases, but also to any changes in IOPS or throughput. Further, you can grow capacity, but you can’t shrink, and you can’t adjust continuously. We constantly fought these limitations when running our adaptive auto-disk-scaling algorithm.

The result is a system that simulates elasticity rather than embodying it, and these limitations ultimately leaked through to the user experience. (You see similar elasticity limitations in other managed database platforms, e.g., Supabase.)

Failure recovery.

Operational recovery was another bottleneck. In theory, we could detach a failed volume and reattach it to a new node to restore service quickly. This would work even for users who weren't paying for full HA replication due to cost concerns. In practice, EBS detached volumes quickly after a clean shutdown (tens of seconds). After a hard failure, however, it could often take 10–20 minutes before the volume detached and became available for remounting elsewhere. That delay compounded user downtime precisely when fast recovery mattered most.

Agents further expose these limitations

Agents make these limitations even sharper. They often operate on sandboxed replicas of production to avoid risk, scale up to work, and scale down just as fast when done. Their workloads are ephemeral, demanding storage that can respond instantly. When many agents work on the same data in parallel, cost efficiency becomes critical.

As we began exploring agents for both internal and customer use, it became clear that today’s cloud infrastructure needed to be rethought.

Alternative architectures we discarded

We also evaluated other architectures—most notably local NVMe and Aurora-like page-server systems—but ultimately set them aside.

Local NVMe offers the raw performance we wanted, but not the durability. If an instance fails, the data disappears with it. To compensate, every customer would need a two- or three-node database cluster for redundancy (which is why, for example, PlanetScale Metal requires a minimum of three-node clusters). In our experience, this trade-off is cost-prohibitive for many workloads.

NVMe also lacks true storage elasticity. Scaling a multi-terabyte service requires copying terabytes of data to a new node and failing over, a process that takes hours or even days. Spinning up a read replica is equally slow. The throughput and latency numbers look impressive in isolation, and NVMe remains a great substrate for caching. But it revives the pre-cloud model of dedicated boxes with fixed compute and disk, which isn't the foundation for an elastic, managed database platform.

Another option was a distributed page-server architecture, first introduced by Amazon Aurora and later adopted by Neon and Google AlloyDB. These systems replace PostgreSQL's local storage layer with calls to a distributed page-storage service that holds pages remotely, plus a separate distributed log for write-ahead logging. It's an elegant design for elasticity, but it comes at a cost: it requires forking PostgreSQL and rewriting significant portions of its storage internals to communicate with the new remote layer.

That coupling introduces real risks:
- Maintaining parity with upstream PostgreSQL becomes a constant effort as new versions are released.
- Features that depend on storage semantics must be reimplemented or revalidated.
- Debugging and performance tuning shift from a well-understood ecosystem to a proprietary one.

The result is a database-specific system rather than a general-purpose storage substrate.

We chose to solve these problems at the storage layer. Elasticity, durability, and iteration should be properties of the underlying substrate, not features baked into a single database engine. By working at the block-storage level, we could keep PostgreSQL unchanged while making the system extensible to any workload that runs on disks. A forkable storage layer offers a more general foundation: volumes that can be cloned, branched, or scaled independently of the systems that use them (and not limited to Postgres databases).

Fluid Storage emerged from that design choice: a distributed system built for strong durability, true elasticity, and zero-copy forks. It charges for storage consumed, not allocated, and scales fluidly in both directions. It is not a faster EBS, but a storage system re-architected for true elasticity and native iteration.

When we set out to design our storage architecture, the goal wasn’t just to make storage faster. It was to make it behave differently, to meet the evolving needs of existing workloads and the new needs of agentic workloads. We focused on six core objectives:

Fork-first. Forks, clones, and snapshots aren't exceptional operations; they're primitives. Copy-on-write happens at the block layer, and creating a new volume or database fork is a highly efficient metadata update, not a data copy. With these capabilities, you can quickly branch full data environments and test changes in isolation, all without copying or reloading data.

Truly elastic, in both directions. Volumes can scale up or down fluidly, adapting to changing workloads and costs. Storage expands as data grows and contracts as it’s deleted or pruned. Developers or platform operators no longer need to over-provision storage based on future need, and are not prevented from downscaling if needed.

Usage-based and resource-efficient. You pay for what you use, not what you allocate. Fluid Storage’s multi-tenant design automatically reclaims unused space. Efficiency comes from the architecture itself, not by hiding platform-level waste.

Predictable performance. Elasticity shouldn’t mean variability. Fluid maintains stable latency and throughput across tenants through intelligent scheduling and load-balanced data sharding.

Postgres-compatible foundation. Applications see a normal Linux block storage device, so PostgreSQL—and any other database or file system—runs unmodified. This ensures immediate compatibility with existing tooling and software systems.

Built for both developers and platforms. A single developer can spin up a fork per commit; a platform operator can run thousands of Fluid-backed databases, each isolated, elastic, and cost efficient.

The next section describes how the architecture implements them in practice.

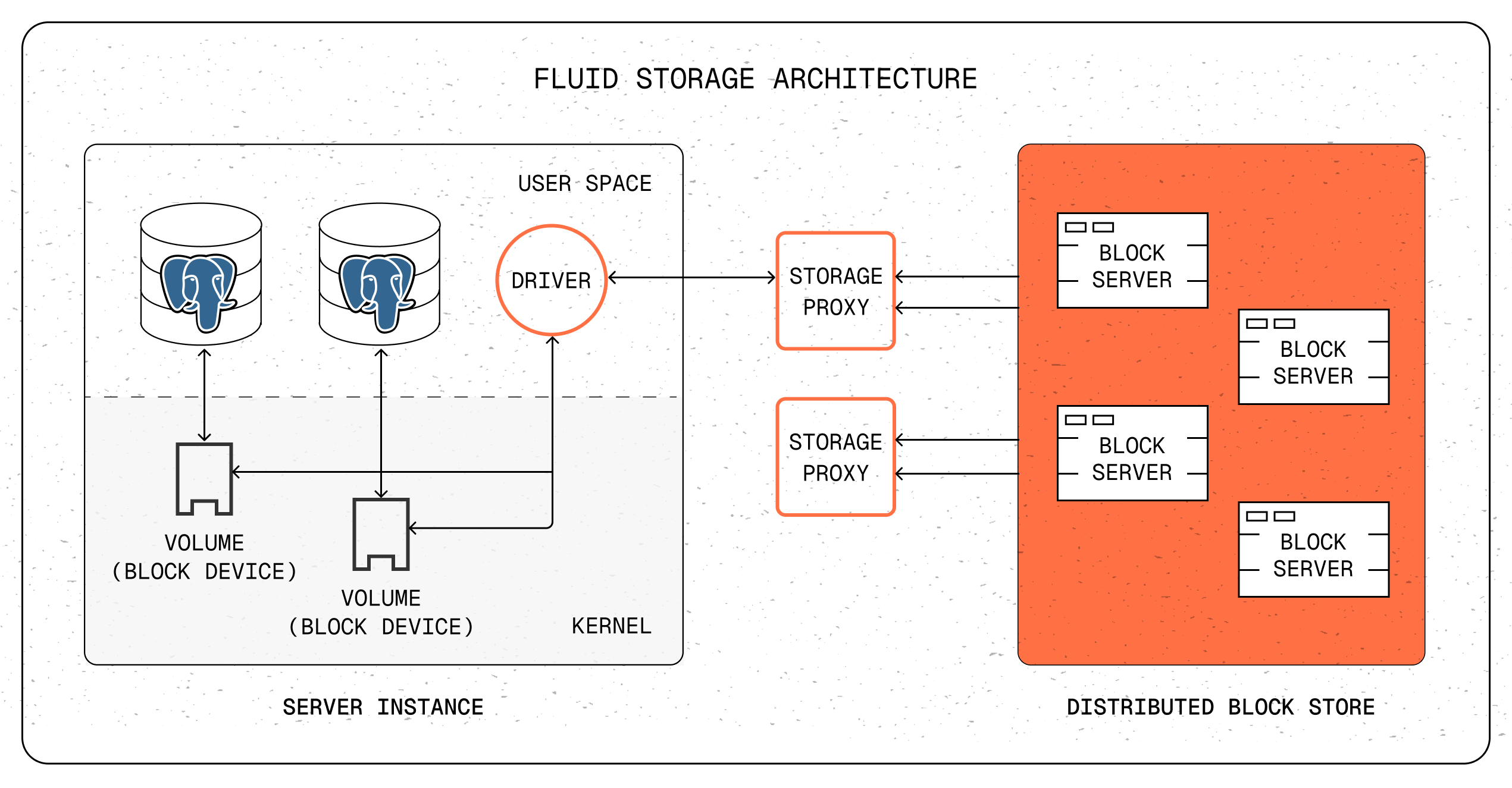

Fluid Storage consists of three cooperating layers: the DBS block store, a layer of storage proxies, and a user-space storage device driver. Each is designed to make elasticity and iteration first-class operations within the storage substrate itself, not conveniences layered on top.

The lowest layer of Fluid Storage is the DBS, a transactional distributed key-value store that provides elastic, horizontally scalable block storage. DBS runs on local NVMe drives for performance. Volumes are divided into shards, each shard mapped to a replica set within the cluster. This architecture allows a single logical volume to scale seamlessly by spreading I/O across shards (and therefore many block servers) in the cluster. Cluster capacity can be dynamically increased or decreased without downtime by adding or removing DBS block servers, and shards are rebalanced transparently across the cluster.
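To make the sharding idea concrete, here is a minimal Python sketch. It range-partitions a volume into shards, assigns each shard to a replica set through a placement table, and naively rebalances when a replica set is added. The shard size, the `Placement` class, and the least-loaded policy are all hypothetical; the post does not describe how DBS actually places or moves shards.

```python
from collections import Counter

# Hypothetical shard size: each shard covers 64 MiB of a volume's logical address space.
SHARD_BYTES = 64 * 1024 * 1024


def shard_index(offset: int) -> int:
    """Range-partition a volume: byte offset -> shard number within that volume."""
    return offset // SHARD_BYTES


class Placement:
    """Hypothetical placement table mapping (volume, shard) to a replica set."""

    def __init__(self, replica_sets):
        self.replica_sets = list(replica_sets)
        self.table = {}  # (volume_id, shard) -> replica-set name

    def _load(self) -> Counter:
        load = Counter({rs: 0 for rs in self.replica_sets})
        load.update(self.table.values())
        return load

    def lookup(self, volume_id: str, offset: int) -> str:
        key = (volume_id, shard_index(offset))
        if key not in self.table:
            # First touch: place the new shard on the least-loaded replica set.
            load = self._load()
            self.table[key] = min(self.replica_sets, key=lambda rs: load[rs])
        return self.table[key]

    def add_replica_set(self, name: str) -> None:
        self.replica_sets.append(name)
        self.rebalance()

    def rebalance(self) -> None:
        # Move one shard at a time from the busiest to the idlest replica set
        # until shard counts differ by at most one.
        while True:
            load = self._load()
            busiest = max(self.replica_sets, key=lambda rs: load[rs])
            idlest = min(self.replica_sets, key=lambda rs: load[rs])
            if load[busiest] - load[idlest] <= 1:
                return
            key = next(k for k, rs in self.table.items() if rs == busiest)
            self.table[key] = idlest
```

Consider a sequential scan over 512 MiB of a volume. It touches eight shards, spread evenly across the available replica sets. Adding a replica set moves only enough shards to even out the load, which is the property that lets capacity change without downtime.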

DBS maps block addresses (keys) to disk blocks (values). Every write is versioned, allowing old and new values to coexist until garbage collection reclaims the obsolete ones. Transactions provide atomicity and consistency. Data is replicated synchronously across multiple DBS replicas as part of each write operation, ensuring strong consistency and availability. A single DBS cluster can typically manage hundreds of terabytes of logical storage (the data visible to clients). The underlying physical storage is larger to accommodate replication.
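The versioning behavior can be illustrated with a toy multi-version key-value map:
- Each atomic commit stamps its writes with a new version.
- Readers can read as of an older version.
- Garbage collection drops versions that no remaining reader can see.

This is a single-threaded Python sketch under our own assumptions: a global version counter and an explicit `oldest_reader` horizon. It omits replication and concurrency control and is not DBS's actual design.

```python
import bisect
from collections import defaultdict


class VersionedKV:
    """Toy multi-version map: block address -> [(version, value), ...]."""

    def __init__(self):
        self.versions = defaultdict(list)  # each list stays sorted by version
        self.clock = 0                      # last committed version

    def commit(self, writes: dict) -> int:
        """Apply a batch of writes under one new version (all-or-nothing in this model)."""
        self.clock += 1
        for key, value in writes.items():
            self.versions[key].append((self.clock, value))
        return self.clock

    def read(self, key, at_version=None):
        """Return the newest value whose version is <= at_version (default: latest)."""
        limit = self.clock if at_version is None else at_version
        history = self.versions.get(key, [])
        i = bisect.bisect_right([v for v, _ in history], limit)
        return history[i - 1][1] if i else None

    def gc(self, oldest_reader: int) -> None:
        """Drop versions that no reader at version >= oldest_reader can observe."""
        for history in self.versions.values():
            i = bisect.bisect_right([v for v, _ in history], oldest_reader)
            # Keep the newest version visible at oldest_reader, plus everything newer.
            if i > 1:
                del history[: i - 1]
```

The same mechanism is what makes copy-on-write cheap above this layer. An old value stays readable for exactly as long as something still references its version.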

The result is a durable, multi-tenant, horizontally scalable storage layer that supports versioned block writes and enables efficient copy-on-write behavior above it.

Above DBS sits a layer of storage proxies, which present virtual volumes to clients and coordinate all I/O. The proxies translate network block operations into DBS reads and writes, manage volume lineage, and enforce per-volume performance and capacity limits.

Each volume in Fluid Storage maintains metadata that tracks which blocks exist in each generation of the volume. This metadata defines the volume's lineage and enables efficient copy-on-write across snapshots and forks. It answers whether a specific block ID exists in a given generation of a volume, yet adds only roughly 0.003% overhead, small enough to keep in memory for fast lookups.

When a snapshot is taken, the system increments the parent volume's generation number (say, from 5 to 6). The child volume is created starting at that same new generation number (6) and records the parent generation it was forked from (5), establishing its lineage.

From then on, parent and child each get their own generation directory for future writes. They keep separate block metadata for their current generation (6) but share the same information for earlier generations (0 through 5). This lets the child locate and read all of the parent's data without copying any blocks.
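The ~0.003% figure is consistent with, for example, a presence bitmap that stores one bit per 4 KiB block in each generation: 1 / (4096 × 8) = 1/32,768 ≈ 0.0031%. The post does not say how the metadata is encoded, so the block size and bitmap layout below are our assumptions. The sketch shows why such a structure fits in memory and answers membership queries with a single bit test.

```python
# Assumptions (not stated in the post): 4 KiB blocks and one presence bit per block per generation.
BLOCK_BYTES = 4096


class GenerationBitmap:
    """One bit per block: is this block present in this generation?"""

    def __init__(self, num_blocks: int):
        self.bits = bytearray((num_blocks + 7) // 8)

    def add(self, block_id: int) -> None:
        self.bits[block_id >> 3] |= 1 << (block_id & 7)

    def __contains__(self, block_id: int) -> bool:
        return bool(self.bits[block_id >> 3] & (1 << (block_id & 7)))


def overhead_fraction(block_bytes: int = BLOCK_BYTES) -> float:
    """Metadata bits per data bit: 1 / (block size in bits)."""
    return 1 / (block_bytes * 8)


def bitmap_bytes(volume_bytes: int, block_bytes: int = BLOCK_BYTES) -> int:
    """Bitmap size for one generation of a volume."""
    num_blocks = -(-volume_bytes // block_bytes)  # ceiling division
    return (num_blocks + 7) // 8
```

Under these assumptions, a 100 GiB volume needs 3,276,800 bytes (about 3.1 MiB) of bitmap per generation.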

On writes, the proxy allocates a new block in DBS tagged with its volume's current generation number, updates that generation's directory to map the block ID to the new data location, and marks the block as present in that generation. Data from earlier generations remains unchanged.

On reads, the system traverses generations from newest to oldest, checking the block metadata for each generation to see if the block exists there. It stops at the first generation where the block is found and reads from that generation's data. This lookup completes in microseconds, as checking membership is extremely fast.
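The snapshot, write, and read rules above can be modeled in a few dozen lines of Python. The sketch simplifies under our own assumptions:
- A list stands in for DBS.
- Each generation's directory is a plain dict, and dict membership stands in for the block metadata.
- Unwritten blocks read as zeros.

It models a writable fork; a read-only snapshot would be the same child volume with writes disallowed.

```python
class BlockStore:
    """Stand-in for DBS: append-only physical blocks addressed by location."""

    def __init__(self):
        self.blocks = []

    def allocate(self, data: bytes) -> int:
        self.blocks.append(data)
        return len(self.blocks) - 1

    def read(self, location: int) -> bytes:
        return self.blocks[location]


class Volume:
    BLOCK_SIZE = 4096  # assumption, used only for zero-filled reads of unwritten blocks

    def __init__(self, store, frozen=None, gen=0, forked_from=None):
        self.store = store
        # Earlier generations, oldest first: [(generation, {block_id: location})].
        # These dicts may be shared with a parent or child and are never mutated.
        self.frozen = list(frozen or [])
        self.gen = gen
        self.current = {}  # directory for this volume's current generation only
        self.forked_from = forked_from

    def fork(self) -> "Volume":
        # Freeze the current generation and bump the parent's generation number;
        # the child starts at the same new number and shares all frozen directories.
        self.frozen.append((self.gen, self.current))
        self.gen += 1
        self.current = {}
        return Volume(self.store, self.frozen, self.gen, forked_from=self.gen - 1)

    def write(self, block_id: int, data: bytes) -> None:
        # Copy-on-write: always allocate a new physical block and record it in the
        # current generation's directory (older generations are left untouched).
        self.current[block_id] = self.store.allocate(data)

    def read(self, block_id: int) -> bytes:
        # Search newest to oldest generation; stop at the first that has the block.
        if block_id in self.current:
            return self.store.read(self.current[block_id])
        for _gen, directory in reversed(self.frozen):
            if block_id in directory:
                return self.store.read(directory[block_id])
        return bytes(self.BLOCK_SIZE)  # never written: reads as zeros
```

Suppose a parent writes block 0, forks, and then parent and child each write one block. The shared store then holds exactly three blocks, and the fork itself allocated none.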

This lineage mechanism allows multiple volumes to safely share unmodified blocks. Snapshots are fast and storage-efficient, adding only metadata overhead. Forks are similarly fast and zero-copy, duplicating snapshot mappings without data movement. Physical storage grows only as data diverges and new blocks are written.

The storage proxy also provides control and safety at the system boundary. It authenticates clients, maintains secure isolation between tenants, and can enforce per-volume IOPS and storage limits. It can cache frequently accessed blocks to improve locality, though caching is an optimization rather than a requirement.
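The post doesn't say how the proxy enforces per-volume IOPS limits. A token bucket is one common technique for this, sketched here in Python as an illustrative assumption:
- Each I/O consumes a token.
- Tokens refill at the volume's IOPS limit.
- A bounded burst allowance absorbs short spikes.

The injectable clock keeps the example deterministic.

```python
import time


class TokenBucket:
    """Hypothetical per-volume IOPS limiter (token bucket).

    Tokens refill continuously at `rate` per second up to `burst`;
    each I/O spends one token.
    """

    def __init__(self, rate: float, burst: float, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens = burst
        self.last = clock()

    def try_acquire(self, n: int = 1) -> bool:
        now = self.clock()
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False  # over the limit: a real proxy would queue or delay the I/O
```

Rejecting outright keeps the sketch short. In practice, a limiter in the I/O path would more likely hold the request until enough tokens accumulate.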

Because agents often spin up parallel instances of themselves, consistency management had to be lightweight, version-aware, and tolerant of many concurrent forks operating on shared data.

Together, these responsibilities make the storage proxy the coordination and lineage layer of Fluid Storage. It’s the component that turns distributed blocks into coherent volumes.

PostgreSQL expects a local filesystem on top of a block device. Rewriting its storage engine to speak a custom API would break decades of compatibility. So instead, we integrated at the kernel boundary through a user-space storage device driver.

The storage device driver exposes Fluid Storage volumes as standard Linux block devices mountable with filesystems such as ext4 or xfs. It manages multiple I/O queues pinned to CPU cores for concurrency, supports zero-copy operations when possible, and allows volumes to be resized dynamically while online. It also provides device recovery primitives which massively simplify our rollouts and error recovery.

Because this integration happens at the Linux block layer, Fluid Storage can use existing OS-level mechanisms for resource control. Per-volume IOPS and throughput limits can be managed through standard Linux controls such as cgroups. This provides predictable performance isolation without specialized kernel modifications. We can also rely on standard kernel tools to monitor and test our block device.
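For reference, the standard cgroup v2 interface for this is the `io.max` file. It takes a device's `MAJOR:MINOR` numbers followed by `rbps`, `wbps`, `riops`, and `wiops` limits, where `max` removes a limit. This Python helper formats and writes such a line. The device path and cgroup directory you pass in are placeholders, and we are not claiming this is how Tiger Cloud configures its limits.

```python
import os


def device_numbers(dev_path: str) -> tuple[int, int]:
    """Return (major, minor) for a block device node such as /dev/<name>."""
    rdev = os.stat(dev_path).st_rdev
    return os.major(rdev), os.minor(rdev)


def io_max_line(major: int, minor: int, *, rbps=None, wbps=None,
                riops=None, wiops=None) -> str:
    """Format a cgroup v2 io.max entry; None means 'max' (no limit)."""
    limits = {"rbps": rbps, "wbps": wbps, "riops": riops, "wiops": wiops}
    fields = " ".join(f"{k}={'max' if v is None else int(v)}" for k, v in limits.items())
    return f"{major}:{minor} {fields}"


def apply_io_max(cgroup_dir: str, line: str) -> None:
    """Write the limit into <cgroup_dir>/io.max (requires cgroup v2 and permissions)."""
    with open(os.path.join(cgroup_dir, "io.max"), "w") as f:
        f.write(line + "\n")
```

For example, `io_max_line(259, 3, riops=5000, wiops=5000)` yields `259:3 rbps=max wbps=max riops=5000 wiops=5000`. This caps the device's read and write IOPS for every process in that cgroup while leaving bandwidth unlimited.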

Crucially, this design also means Fluid Storage inherits advances from the broader ecosystem. PostgreSQL 18 introduced support for Linux io_uring, which significantly improves asynchronous I/O throughput, and Linux continues to evolve its block and memory subsystems in similar ways. Because Fluid Storage operates at this layer, it already benefits from recent PostgreSQL and Linux improvements and will continue to inherit future performance gains automatically.

Because Fluid Storage is presented as a Kubernetes storage class, it integrates seamlessly with our existing orchestration software. Operations such as resizing volumes or taking Postgres-consistent snapshots work out of the box, much as they do on EBS.

Putting these three layers together, we can trace read and write operations through the system. Here we take the perspective of PostgreSQL running on Fluid Storage as its underlying block storage.
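Because the volumes sit behind a storage class, a fork can be expressed with the standard Kubernetes CSI snapshot objects. First create a `VolumeSnapshot` of an existing claim, then a new `PersistentVolumeClaim` whose `dataSource` points at that snapshot. The Python below builds those manifests as plain dicts and prints them as a `List` that `kubectl apply -f` accepts. The class names `fluid` and `fluid-snapshots` are hypothetical placeholders; the post does not name Tiger Cloud's storage or snapshot classes.

```python
import json

SNAPSHOT_API = "snapshot.storage.k8s.io"


def volume_snapshot(name: str, source_pvc: str, snapshot_class: str = "fluid-snapshots") -> dict:
    """Snapshot an existing PVC (class name is a hypothetical placeholder)."""
    return {
        "apiVersion": f"{SNAPSHOT_API}/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": source_pvc},
        },
    }


def pvc_from_snapshot(name: str, snapshot: str, size: str,
                      storage_class: str = "fluid") -> dict:
    """A new writable claim seeded from a snapshot, i.e. a fork."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "dataSource": {"name": snapshot, "kind": "VolumeSnapshot", "apiGroup": SNAPSHOT_API},
        },
    }


if __name__ == "__main__":
    items = [
        volume_snapshot("pg-main-snap", "pg-main-data"),
        pvc_from_snapshot("pg-fork-data", "pg-main-snap", "100Gi"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```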

Read Path

Write Path

While this describes the basic I/O flow, it omits the mechanisms that can improve performance and predictability, such as block caching at either the local instance (through the user-space device driver) or the storage proxy, and management of volume IOPS and throughput at both layers. These controls don’t alter the high-level behavior of Fluid Storage; they make the system more efficient, responsive, and predictable under varying workloads.

To PostgreSQL, Fluid Storage simply appears as a local disk. Underneath, it is a disaggregated storage system that scales elastically, replicates safely, and supports fast zero-copy forks. All while maintaining standard block storage semantics.

Architecture is meaningful only in what it enables. In Fluid Storage, the results appear along two dimensions: the technical properties that define how the system behaves, and the developer outcomes that emerge from them.

Forkability and snapshots: Snapshots are metadata-only and complete quickly regardless of volume size. Forks are writable snapshots. They start as zero-copy and then store only the blocks that diverge from their parent snapshot. Storage grows only as data diverges.

We ran microbenchmarks on the Fluid Storage layer to measure end-to-end latency for snapshot and fork creation as handled by the storage proxy, across volumes ranging from 1 GB to 100 GB. In all cases, both operations completed within roughly 500–600 milliseconds. These measurements exclude orchestration time (e.g., Kubernetes provisioning and mounting a new volume at a client) and any application-level coordination that may precede a snapshot, such as issuing a PostgreSQL checkpoint. They represent the raw efficiency of the underlying storage system—the baseline latency achievable for instant forks and snapshots under normal load.

Elasticity: Volumes expand and contract fluidly with workload changes, eliminating waste from unused allocation. Cluster throughput scales linearly as demand grows, with I/O distributed across DBS block servers. Each volume is sharded across many block servers, reducing hotspots and enabling parallel reads, writes, and recovery.

Cost and efficiency: Fluid Storage’s multi-tenant design keeps utilization high across large numbers of colocated volumes. Unmodified blocks are shared across database forks and read replicas, so new forks or replicas consume only the incremental changes they write. Because the platform continuously reclaims and reuses space, it doesn’t need to over-allocate or reserve idle capacity. Usage is billed based on actual consumption, not provisioned size, and the efficiency of this architecture translates directly into lower cost for users.
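A back-of-the-envelope comparison shows why sharing unmodified blocks matters. The Python below compares full per-volume copies with copy-on-write forks that store only their diverged blocks. The 200 GB dataset echoes the earlier EBS example. The fork count and 2% divergence rate are hypothetical inputs, not measurements.

```python
def full_copies_gb(dataset_gb: float, forks: int) -> float:
    """Every fork or replica is its own full volume (primary + forks)."""
    return dataset_gb * (1 + forks)


def shared_blocks_gb(dataset_gb: float, forks: int, diverged_fraction: float) -> float:
    """Forks share unmodified blocks and store only the blocks they rewrite."""
    return dataset_gb + forks * dataset_gb * diverged_fraction


# Hypothetical scenario: ten agent forks of a 200 GB database, each rewriting 2% of its blocks.
full = full_copies_gb(200, 10)
shared = shared_blocks_gb(200, 10, 0.02)
print(f"full copies: {full:.0f} GB, copy-on-write forks: {shared:.0f} GB")
# -> full copies: 2200 GB, copy-on-write forks: 240 GB
```

The gap widens with every additional fork, because each new fork adds only its divergence rather than a full copy.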

| Operation | Latency p50 (ms) | Latency p99 (ms) | Throughput (IOPS) | Throughput (MB/s) |
|---|---|---|---|---|
| Read (random) | 1.30 | 1.84 | 110,436 | 1,377 |
| Read (seq) | 0.97 | 1.74 | 118,743 | 1,375 |
| Write (random) | 5.3 | 7.9 | 67,137 | 689 |
| Write (seq) | 5.4 | 8.0 | 40,038 | 494 |

Benchmark results from fio workloads on a Fluid Storage cluster running in a standard production environment on AWS. Reads and writes are generated from user space using direct I/O to bypass the local page cache, exercising the full I/O path described in “Life of a Request.” Latency and IOPS benchmarks use 4 KB blocks, while throughput (MB/s) benchmarks use 512 KB blocks to emulate client-side write coalescing.
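If you want to run a comparable test on your own block device, the Python below prints a fio job file that mirrors the methodology in the caption. It uses direct I/O, 4 KB blocks for latency and IOPS, and 512 KB blocks for throughput. The device path, I/O engine, queue depth, job count, and runtime are our assumptions, since the post does not report them, so results will not be directly comparable. Write jobs overwrite the target device, so point it only at a scratch volume.

```python
# Hypothetical parameters: the post does not state queue depth, job count, runtime, or engine.
DEVICE = "/dev/nvme1n1"  # placeholder: a scratch block device you can safely overwrite


def fio_job(device: str = DEVICE, iodepth: int = 32, numjobs: int = 4, runtime_s: int = 60) -> str:
    lines = [
        "[global]",
        f"filename={device}",
        "ioengine=libaio",
        "direct=1",          # bypass the page cache, as in the benchmark caption
        "time_based=1",
        f"runtime={runtime_s}",
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
        "group_reporting=1",
    ]
    for bs in ("4k", "512k"):  # 4k for latency/IOPS, 512k for throughput
        for rw in ("randread", "read", "randwrite", "write"):
            # stonewall makes each job wait for the previous one to finish
            lines += ["", f"[{rw}-{bs}]", f"rw={rw}", f"bs={bs}", "stonewall"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(fio_job(), end="")  # save to bench.fio, then run: fio bench.fio
```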

Performance: Latency remains low and stable across diverse client workloads, supported by asynchronous I/O and distributed sharding. Benchmarks from a standard Fluid Storage deployment in our production environment—run on production-scale client instances without IOPS rate limiting—demonstrate high and consistent performance.

As shown in the table above, a single Fluid Storage volume sustains more than 110,000 read IOPS and about 1.375 GB/s of read throughput. In its current server configuration, read throughput is bottlenecked by network bandwidth. The volume sustains 40,000–67,000 write IOPS and roughly 500–700 MB/s of write throughput. Single-block (4 KB) read latency is typically around 1 ms, and write latency around 5 ms. All writes are synchronously replicated in DBS before returning to the client, ensuring durability without sacrificing stability.

These capabilities change how developers and agents build, test, and evolve their systems:
- In continuous integration and deployment (CI/CD) pipelines, every pull request can run against its own isolated database fork, eliminating the need to queue behind shared test environments.
- Schema migrations can be rehearsed safely on full-fidelity clones of production data before any production change, reducing rollout risk.
- Analytics teams can create short-lived copies of production data (without actually copying the data or paying for it twice), explore results, and discard them when finished.
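Two quick sanity checks help when reading these numbers. Little's law (in-flight I/Os ≈ IOPS × latency) suggests how much concurrency the benchmark needed to reach them. Multiplying IOPS by block size shows why the MB/s column comes from the separate 512 KB runs. Using p50 latency as a stand-in for mean latency is our approximation, and the post does not report the actual queue depth.

```python
def in_flight(iops: float, latency_ms: float) -> float:
    """Little's law: average outstanding I/Os = completion rate x time in system."""
    return iops * latency_ms / 1000.0


def bytes_per_second(iops: float, block_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a given block size."""
    return iops * block_bytes


# Figures from the random-read and random-write rows of the table (p50 latency).
print(round(in_flight(110_436, 1.30)))        # roughly 144 reads in flight
print(round(in_flight(67_137, 5.3)))          # roughly 356 writes in flight
print(bytes_per_second(110_436, 4096) / 1e6)  # ~452 MB/s at 4 KB, well below the 512 KB figure
```

Both estimates point the same way: these results reflect deep I/O queues and large transfers, so a client issuing one small request at a time should expect per-request latency rather than these aggregate rates.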

The same primitives that let developers iterate faster also unlock new possibilities for agentic systems.

Agents can begin from a clean slate, spinning up an empty database for rapid prototyping, or start from a fork of production data to extend existing behavior or add new capabilities. Each agent operates on its own isolated fork, allowing parallel experiments and reasoning paths to run independently. Once experiments complete, results can be compared: a single branch promoted as the new primary, or code changes merged back into a production fork.

In this model, compute can become more ephemeral. Agents and workflows start instantly, run in isolation, and tear down when finished, leaving behind only snapshots that capture stable intermediate states. Snapshots become the natural unit of iteration, something to branch, backtrack, or extend as needed. Fluid Storage makes this loop—fork, test, recover, evolve—both fast and resource-efficient, turning operational data from a single artifact into a dynamic substrate for iteration.

In a world where workloads can self-orchestrate—spinning up, scaling, and shutting down autonomously—reliability must be continuous, not coordinated.

Reliability and Resilience

Fluid Storage is engineered for reliability through four independent layers of resilience—storage replication, database durability, compute recovery, and region-level isolation—each reinforcing the others to ensure consistent operation under failure. All of these mechanisms operate transparently; users never need to manage replicas, tune recovery, or coordinate failover.

1. Storage replication.

The blocks comprising each volume in Fluid Storage are synchronously replicated across multiple block servers within a DBS cluster. The system automatically detects and compensates for replica failures, rebalancing data and restoring full replication without operator intervention. The storage proxy continuously monitors block-server health, routing around failed nodes to maintain continuity. At the client boundary, the storage device driver retries I/O through its current proxy when transient failures occur, and reconnects to a different proxy if the existing connection is lost. These processes are fully automatic, ensuring strong consistency and continuous availability within the storage tier.
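The client-side behavior described above can be sketched as follows: transient errors are retried on the current proxy, and a lost connection switches to another proxy. The exception types, the `send` callback, and the retry count are hypothetical stand-ins. The real driver's protocol is not described in the post, and a production version would add backoff and timeouts.

```python
class TransientIOError(Exception):
    """Hypothetical: a retryable failure, e.g. a timed-out request."""


class ProxyConnectionLost(Exception):
    """Hypothetical: the connection to this proxy is gone."""


def submit_with_failover(request, proxies, send, retries_per_proxy: int = 3):
    """Send `request` through the first healthy proxy in `proxies`.

    Transient errors are retried on the same proxy; a lost connection (or
    exhausted retries) moves on to the next proxy in the list.
    """
    last_error = None
    for proxy in proxies:
        for _attempt in range(retries_per_proxy):
            try:
                return send(proxy, request)
            except TransientIOError as err:
                last_error = err  # retry on the current proxy
            except ProxyConnectionLost as err:
                last_error = err
                break             # reconnect via the next proxy
    raise RuntimeError("I/O failed on every proxy") from last_error
```

Because the proxies hold no client-side state, any proxy can serve the retried request. That is what makes a simple ordered failover list like this workable.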

2. Database durability.

Beyond block-level replication, PostgreSQL durability is maintained through incremental backups and continuous WAL streaming. Each database retains enough WAL for arbitrary point-in-time recovery (PITR); even free services on Tiger Cloud provide 24-hour PITR. These backups and WAL segments are stored independently in S3, ensuring operational isolation from the active storage tier.

In the unlikely event of a Fluid Storage tier failure, new database volumes are automatically provisioned and restored from S3. The system also supports transparent migration between EBS-backed storage and Fluid Storage, allowing databases to move seamlessly across tiers if needed.

3. Compute recovery.

If a compute instance fails (the node running PostgreSQL itself), Tiger Cloud automatically provisions a replacement, reattaches the existing Fluid Storage volume, and restarts PostgreSQL, which replays its latest WAL segments to recover. Recovery typically completes within tens of seconds, even for single-instance databases without HA replicas. Because the storage proxy maintains no client-side state, reconnection and recovery are immediate once the new compute is available, all without user action.

4. Region resilience.

Fluid Storage supports both single- and multi-availability zone (AZ) deployments for a single DBS cluster. In multi-AZ configurations, sharded replica sets are distributed across zones to tolerate AZ-level failures. These modes represent a tradeoff: cross-AZ replication improves resilience but increases latency and inter-AZ network costs.

Current deployments favor single-AZ clusters for lower latency and cost efficiency, while cross-AZ durability is achieved through PostgreSQL’s own high-availability replication. In this model, each database node in a multi-AZ cluster uses an independent Fluid Storage cluster in its respective zone. This design requires a full copy of storage per zone (at higher cost) but provides stronger fault isolation: each cluster operates with its own control plane, minimizing correlated failures across zones. This coordination is handled automatically by the system; the complexity is abstracted away from the user.

Availability and Access

Fluid Storage already serves customer-facing workloads within Tiger Cloud and powers all databases in our new free tier. Developers can create, pause, resume, fork, and snapshot databases directly through the cloud console, REST API, Tiger CLI, or the Tiger MCP server, making it easy to experiment with elastic and fork-first behavior in practice.

The system is available today as a public beta for the free tier, with larger-scale workloads being onboarded gradually through early-access programs. General availability will follow sustained operation across a broad set of customer environments, ensuring that Fluid Storage meets the standards of maturity, reliability, and performance expected of a core database storage platform.

Fluid Storage introduces our next-generation storage architecture: a distributed block layer that combines synchronous replication, true elasticity, and zero-copy forks. It delivers predictable performance, efficient scaling up or down, and rapid recovery. It does all this while remaining fully compatible with PostgreSQL and, because it operates at the block storage layer, with other databases and file systems as well.

Every database in Tiger Cloud’s free tier now runs on Fluid Storage, giving developers direct access to these capabilities.

This foundation opens new ways to build, test, and extend data-driven systems. From faster iteration in developer workflows to more autonomous, agentic applications, Fluid Storage makes data as dynamic as the systems built on it. Because if agents are the new developers, storage must evolve to match their speed.

You can try Fluid Storage today through Tiger’s free services: get started instantly with the Tiger CLI and MCP Server.

And if you’re building an agentic or infrastructure platform and want to explore how Tiger’s databases powered by Fluid Storage can support your workloads, we’re opening early-access partnerships. Please get in touch to learn more.
