TimescaleDB 2.22 & 2.23 – 90x Faster DISTINCT Queries, Postgres 18 Support, Configurable Columnstore Indexes, and UUIDv7 for Event-Driven Analytics

TimescaleDB 2.22 & 2.23: 90× faster DISTINCT queries, zero-config hypertables, UUIDv7 partitioning, Postgres 18 support, and configurable columnstore indexes.

Read more

Spend more time improving your AI app and less time managing a database.

Start buildingTLDR: We built pg_textsearch for modern AI workloads: BM25 ranking, hybrid retrieval, and transactional simplicity all in one Postgres extension. Think Elasticsearch-quality relevance with native Postgres simplicity.

Preview Release

Search has evolved through distinct epochs, each defined by different needs and workloads.

The first epoch focused on human-facing catalog search: e-commerce product listings, documentation sites, content management systems. Users typed queries and expected relevant results from relatively static corpora. Postgres native full-text search, with its tsvector and tsquery types, has served this use case well for years. For applications with thousands to hundreds of thousands of documents, it provides fast keyword matching with basic relevance ranking across 29+ supported languages.

The second epoch arrived with high-volume operational data: server logs, metrics, events streaming in by the millions. Elasticsearch dominated here, offering not just search but aggregations, faceting, and the ability to ingest and query massive, constantly-changing datasets in near real-time. This workload demanded a different architecture: horizontal scaling, eventual consistency, and sophisticated write paths. Excellent tools like ParadeDB's pg_search are bringing similar capabilities to Postgres, integrating Tantivy to handle high-throughput log analysis and operational search within the database.

Now we're in a third epoch: AI-native applications. RAG systems, chat agents, and agentic workflows need search not for humans browsing catalogs or engineers querying logs, but for LLMs retrieving context. The corpus doesn't change as rapidly as streaming logs, but result quality is paramount: these systems need both semantic understanding from vector search and the precision of keyword matching. The two approaches are deeply complementary: vectors capture conceptual similarity while keywords ensure exact terms aren't missed.

This is where pg_textsearch comes in. We're announcing the preview release of a PostgreSQL extension built specifically for this third epoch, designed to be the best-in-class complement to vector search for AI applications. It implements modern BM25 ranking with the operational simplicity of Postgres, letting you build complete hybrid search systems in a single database alongside pgvector and pgvectorscale.

These AI applications share a critical requirement: retrieval quality directly determines output quality. When a RAG system pulls mediocre documents instead of the best matches, the LLM generates mediocre responses. The challenge is that Postgres native full-text search lacks the ranking signals needed to consistently surface the most relevant results.

Consider building a RAG system that searches technical documentation to answer user questions. A user asks about "database connection pooling." With Postgres’ native ts_rank, documents that mention "database" 50 times but barely discuss “pooling” rank higher than a comprehensive pooling guide that only mentions "database" 5 times.

This happens because ts_rank lacks the corpus-aware signals that modern search uses. It doesn't calculate inverse document frequency (IDF), so common words receive the same weight as rare, meaningful terms. It doesn't apply term frequency saturation, allowing documents that repeat keywords excessively to dominate rankings. It doesn't normalize by corpus-average document length, causing longer documents to score higher regardless of actual relevance.

There's also a brittleness problem with Postgres' Boolean matching approach. GIN and GiST indexes accelerate searches through the @@ operator, which requires all query terms to appear in a document for it to match. A highly relevant document missing just one query term gets excluded entirely before ranking begins. For a query like "database connection pooling management," a definitive guide to connection pooling that never uses the word "management" won't appear in results at all—you lose potentially excellent matches due to strict Boolean requirements.

For AI applications where an LLM depends on retrieving the right context, these ranking deficiencies directly impact output quality. The model generates responses based on whatever documents the search returns. If mediocre matches rank above excellent ones, the entire system suffers.

BM25 addresses these issues through three key improvements: (i) IDF weighting identifies discriminating terms, (ii) term frequency saturation prevents keyword stuffing from gaming rankings, and (iii) length normalization ensures fair comparison between short and long documents. These aren't exotic features—they're the baseline for quality search, proven across decades of information retrieval research.

Performance matters too, particularly as the corpora grow. Postgres' native search also runs into scaling and performance issues. Postgres must score every matching document to rank results; there's no efficient way to retrieve just the top-k most relevant documents without examining the full match set. When searching for common terms that match millions of rows, this becomes prohibitively expensive. The indexes (GIN/GiST) help find matches but provide no assistance with ranking them.

Teams report queries degrading from under 1 second to 25-30 seconds on just 800,000 rows when using ts_rank for relevance sorting (sql - Postgres Full Text Search - Issues with Query Speed - Stack Overflow), with significant performance deterioration around 1-2 million rows (Full text search in milliseconds with PostgreSQL | May 01, 2015). As one analysis noted, ranking requires consulting the tsvector of each matching document, which becomes I/O bound and slow at scale. For AI applications, getting the ranking right matters more than raw speed—a correct top-10 in 100ms beats a mediocre top-10 in 10ms—but the truth is that at scale you need both state-of-the-art performance and great quality results.

BM25 addresses these ranking quality issues directly. It improves on basic keyword matching through three key techniques proven over decades of information retrieval research:

These techniques are well-established and widely deployed. Modern search engines use hierarchical index architectures (LSM-tree style) to avoid expensive random disk I/O, compression techniques like delta encoding to reduce storage by 70-90%, and advanced query algorithms like Block-Max WAND to find top results without scoring every document.

pg_textsearch brings these proven approaches to Postgres, but takes a focused approach: implementing excellent BM25 ranking rather than recreating a full search platform. Search libraries like Lucene (powering Elasticsearch and Solr) and Tantivy (powering Meilisearch and Quickwit) have evolved to include faceted search, geo-spatial queries, and complex aggregations—features Postgres already provides through other means. We concentrate on what's missing: modern ranked keyword search optimized for hybrid AI applications alongside pgvector.

The current preview release uses an in-memory structure for fast writes and queries. Future releases will add disk-based segments with compression and Block-Max WAND optimization—applying the same battle-tested techniques from production search engines.

Let's walk through using pg_textsearch on Tiger Cloud, starting from the basics.

First, enable pg_textsearch in your database:

CREATE EXTENSION pg_textsearch;

That's it; no configuration files or external services. The extension is ready to use.

Let's say you have a table of articles:

CREATE TABLE articles (

id SERIAL PRIMARY KEY,

title TEXT,

content TEXT,

published_date DATE

);

-- Insert some sample data

INSERT INTO articles (title, content, published_date) VALUES

('Getting Started with Postgres',

'Postgres is a powerful relational database system known for its reliability and feature set...',

'2024-01-15'),

('Understanding Indexes',

'Database indexes are data structures that improve query performance by enabling fast lookups...',

'2024-02-20'),

('Advanced Query Optimization',

'Query optimization involves choosing the most efficient execution plan for a given query...',

'2024-03-10');Create a BM25 index on the content column:

CREATE INDEX articles_content_idx ON articles

USING bm25(content)

WITH (text_config='english');

The index is fully transactional and automatically maintained by Postgres. When you UPDATE or DELETE rows, the index updates instantly: no separate sync jobs, no eventual consistency, no data pipelines to monitor. This is fundamentally different from external search systems where keeping your search index in sync with your database becomes a complex distributed systems problem.

The text_config parameter specifies which Postgres text search configuration to use for tokenization and stemming. (Here we chose to piggyback on Postgres’ mature and comprehensive support for tokenization, by using the standard tsvector under the covers). Common options include 'english', 'simple' (no stemming), 'french', 'german', and 29 others built into Postgres.

For smaller datasets (up to around 100,000 documents), expect indexing to complete in a second or two, with queries returning in just a millisecond or two. The preview release keeps everything in memory, so performance is consistently fast for workloads that fit within the configured memory limit (default 64MB per index).

Search for articles about "database performance":

SELECT id, title,

content <@> to_bm25query('database performance', 'articles_content_idx') AS score

FROM articles

ORDER BY score

LIMIT 5;Key points:

<@> “eyeball” operator calculates BM25 scores between text and a query.to_bm25query() creates a query object with the index name for proper IDF calculation.bm25vector type to store term frequencies with index context.pg_textsearch integrates seamlessly with standard SQL:

-- Filter by date and relevance threshold

SELECT title, published_date

FROM articles

WHERE published_date > '2024-01-01'

AND content <@> to_bm25query('query optimization', 'articles_content_idx') < -1.5

ORDER BY content <@> to_bm25query('query optimization', 'articles_content_idx');

-- Group results by month

SELECT DATE_TRUNC('month', published_date) AS month,

COUNT(*) AS matching_articles,

AVG(content <@> to_bm25query('indexes', 'articles_content_idx')) AS avg_score

FROM articles

WHERE content <@> to_bm25query('indexes', 'articles_content_idx') < -0.5

GROUP BY month

ORDER BY month DESC;

-- Join with other tables (example assumes you have a users table)

-- First create the users table and add author_id to articles:

-- ALTER TABLE articles ADD COLUMN author_id INTEGER;

-- CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);

SELECT a.title, u.name AS author,

a.content <@> to_bm25query('postgres tips', 'articles_content_idx') AS score

FROM articles a

JOIN users u ON a.author_id = u.id

ORDER BY score

LIMIT 10;Modern search systems increasingly combine semantic vector search with keyword matching for optimal results. Vector embeddings capture conceptual similarity but can miss exact terms that matter ("PostgreSQL 17.2" vs "PostgreSQL 17.1"). Keyword search provides precision for exact matches but lacks semantic understanding. The best results come from using both together. This hybrid approach has proven effective across major search platforms including Elasticsearch, OpenSearch, and Azure AI Search.

Here's how to build hybrid search with pgvector and pg_textsearch:

-- Create a table with both text content and vector embeddings

CREATE TABLE documents (

id SERIAL PRIMARY KEY,

title TEXT,

content TEXT,

embedding vector(1536) -- OpenAI ada-002 dimension

);

-- Create both indexes

CREATE INDEX documents_embedding_idx ON documents

USING hnsw (embedding vector_cosine_ops);

CREATE INDEX documents_content_idx ON documents

USING pg_textsearch(content)

WITH (text_config='english');

-- Insert sample data

-- Note: In practice, embeddings come from your embedding model (e.g., OpenAI API)

-- Here we use a placeholder array of 1536 zeros for illustration

INSERT INTO documents (title, content, embedding) VALUES

('Database Performance',

'Optimizing query performance in Postgres requires understanding indexes...',

ARRAY_FILL(0.0, ARRAY[1536])::vector),

('Machine Learning Systems',

'Building scalable ML systems requires careful attention to data pipelines...',

ARRAY_FILL(0.0, ARRAY[1536])::vector);One popular method for combining results is Reciprocal Rank Fusion, introduced by Cormack et al. at SIGIR 2009. RRF provides a simple, parameter-free way to merge rankings from multiple sources. While you can implement RRF directly in SQL as shown below, it's equally valid—and sometimes preferable—to handle this logic in your application code:

-- Hybrid search combining vector and keyword results

-- Note: Replace $1 with your actual query embedding from your embedding model

WITH vector_search AS (

SELECT id,

ROW_NUMBER() OVER (ORDER BY embedding <=> $1::vector) AS rank

FROM documents

ORDER BY embedding <=> $1::vector

LIMIT 20

),

keyword_search AS (

SELECT id,

ROW_NUMBER() OVER (ORDER BY content <@> to_bm25query('query performance', 'documents_content_idx')) AS rank

FROM documents

ORDER BY content <@> to_bm25query('query performance', 'documents_content_idx')

LIMIT 20

)

SELECT

d.id,

d.title,

COALESCE(1.0 / (60 + v.rank), 0.0) + COALESCE(1.0 / (60 + k.rank), 0.0) AS combined_score

FROM documents d

LEFT JOIN vector_search v ON d.id = v.id

LEFT JOIN keyword_search k ON d.id = k.id

WHERE v.id IS NOT NULL OR k.id IS NOT NULL

ORDER BY combined_score DESC

LIMIT 10;

This query:

The RRF formula 1 / (k + rank) rewards documents that appear high in both result sets while still including documents that excel in just one.

Adjust the relative importance of vector vs keyword search:

-- Using the same CTEs from above, adjust the weights in the final SELECT:

WITH vector_search AS ( ... ), -- same as above

keyword_search AS ( ... ) -- same as above

SELECT

d.id,

d.title,

0.7 * COALESCE(1.0 / (60 + v.rank), 0.0) + -- 70% weight to vectors

0.3 * COALESCE(1.0 / (60 + k.rank), 0.0) -- 30% weight to keywords

AS combined_score

FROM documents d

LEFT JOIN vector_search v ON d.id = v.id

LEFT JOIN keyword_search k ON d.id = k.id

WHERE v.id IS NOT NULL OR k.id IS NOT NULL

ORDER BY combined_score DESC

LIMIT 10;The SQL examples above implement RRF for illustration, but production systems often require more sophisticated approaches. The paper by Bruch, Gai, and Ingber provides a good overview of fusion methods and their trade-offs. Common approaches include:

The choice depends on your latency requirements, result quality needs, and available computational resources. Simple score fusion like RRF works well for many applications, while systems requiring maximum relevance might justify the computational cost of neural rerankers.

Regardless of the fusion method, hybrid search with pg_textsearch and pgvector gives you both keyword precision and semantic understanding from a single Postgres instance—particularly valuable for RAG systems and other AI applications.

WARNING: Gory technical details ahead! Feel free to skip ahead to try pg_textsearch today to get started using it.

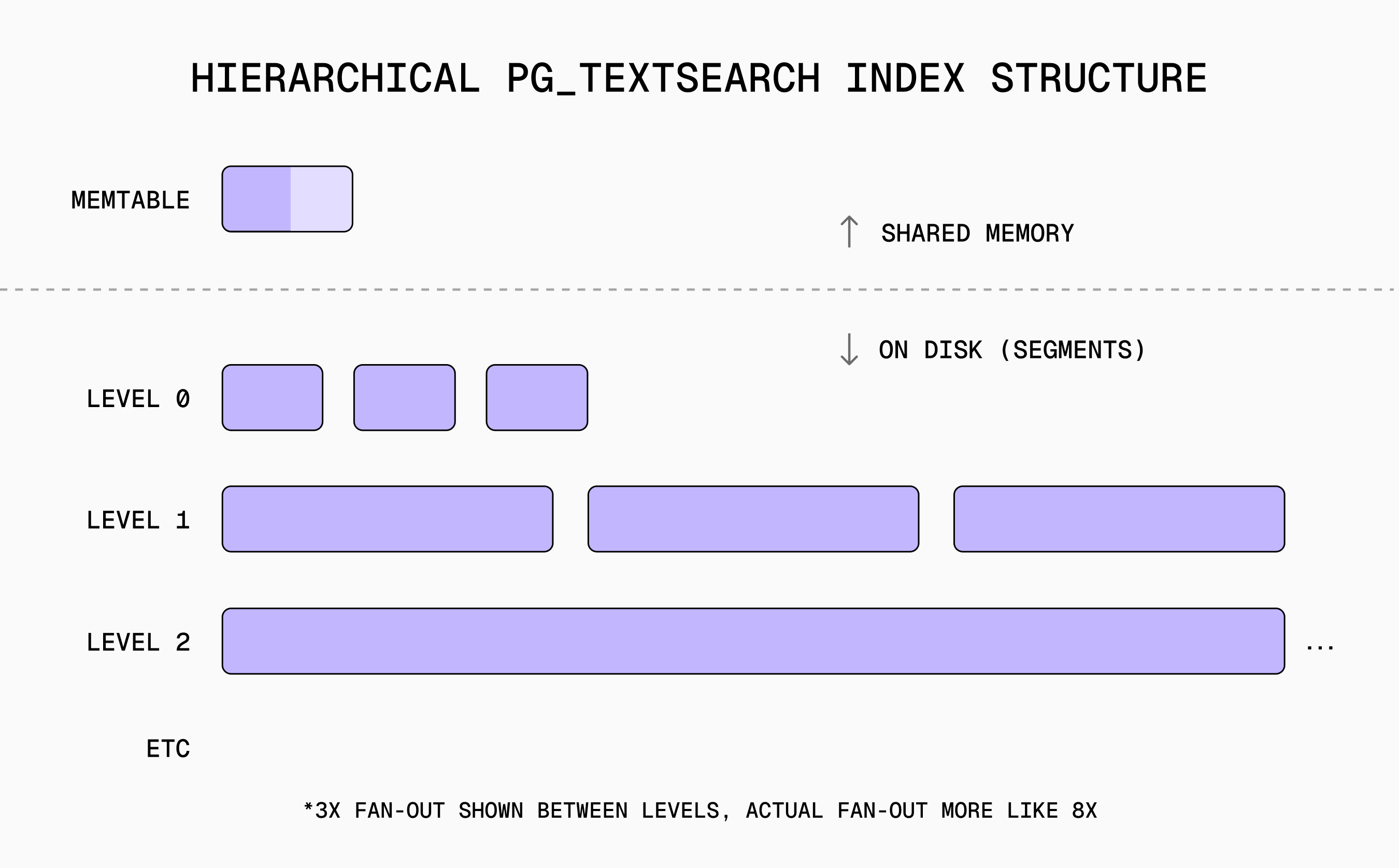

The preview release focuses on supporting the full user surface but backed only by the memtable component: fast, simple, and sufficient for small workloads. The full hierarchical architecture with disk segments will be released in stages over the coming months.

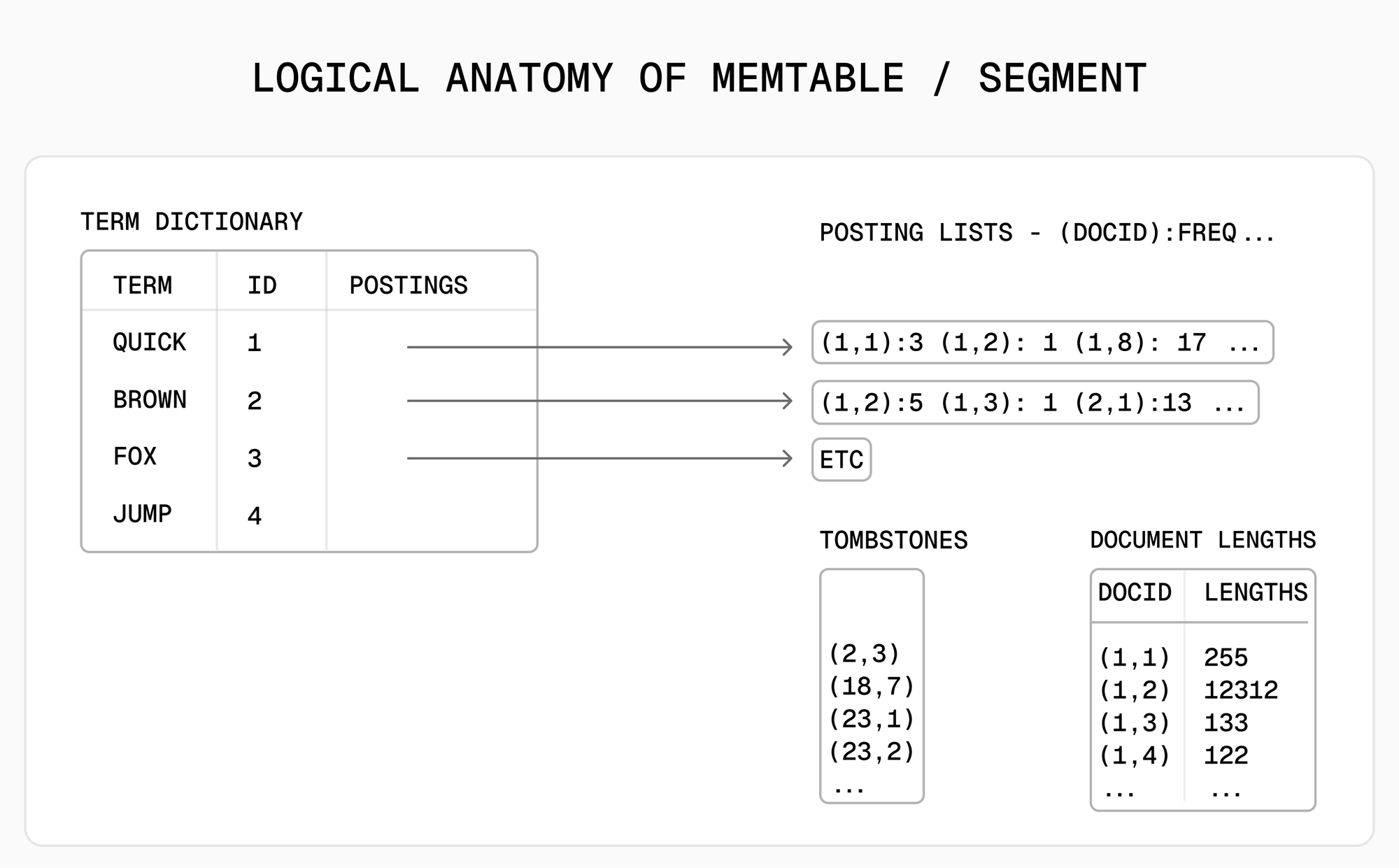

The implementation leverages Postgres Dynamic Shared Areas (DSA) to build an inverted index in shared memory. This allows all backend processes to share a single index structure, avoiding duplication and enabling efficient memory use. The index consists of three core components:

A term dictionary implemented as a DSA hash table with string interning. Each unique term maps to its posting list pointer and document frequency for IDF calculation. String interning ensures each term appears exactly once in memory, regardless of how many documents contain it.

Posting lists stored as dynamically-growing vectors in DSA memory. Each entry contains a document identifier (Postgres TID) and term frequency. Lists remain unsorted during insertion for write performance, then sort on-demand for query processing. This design optimizes for the common case of sequential writes followed by read queries.

Document metadata tracking each document's length and state. The length enables BM25's document normalization. The state supports crash recovery: on restart, the index rebuilds by rescanning documents marked as indexed. Corpus-wide statistics (document count, average length) live here too, updated incrementally as documents are added or removed.

The preview release implements just the memtable layer—the top of the hierarchical structure described earlier. This is intentional: we're releasing in stages to get working software into users' hands quickly while building toward the complete system.

Next milestone: Naive disk segments. When the memtable fills, it flushes to an immutable on-disk segment. Queries merge results from the memtable and all segments. This removes the memory limitation while keeping the implementation straightforward.

Following milestone: Optimized segments with compression. Delta encoding for posting lists, skip lists for faster intersection, and basic query optimization. This brings significant performance improvements for larger indexes.

Future milestone: Background compaction and advanced query algorithms. A background worker merges small segments into larger ones, maintaining optimal query performance. Block-Max WAND enables efficient top-k retrieval without scoring all documents.

Each release maintains backward compatibility. That is, indexes created with earlier versions will continue working as you upgrade.

When you execute a BM25 query:

SELECT * FROM documents

ORDER BY content <@> to_bm25query('database performance', 'docs_idx')

LIMIT 10;

The extension evaluates it in several steps. First, it looks up each query term in the dictionary to retrieve posting lists and IDF values. Then, for documents appearing in any posting list, it computes the BM25 score by summing each term's contribution: IDF × normalized term frequency × length normalization. The normalization factors come from the k1 and b parameters, using the corpus statistics maintained in the index.

pg_textsearch is available now on Tiger Cloud for all customers. You can even try it out with our brand-new free plan.

Get started with the following commands:

curl -fsSL https://cli.tigerdata.com | sh

tiger auth login

tiger service create #if you don't already have one

tiger db connect

(We also have alternative installation instructions for the CLI tool)

Then enable the extension:

CREATE EXTENSION pg_textsearch;

Full documentation for the extension is available here.

This is a preview release, and your feedback will shape the final product. Tell us about your use cases, report bugs, and request features. You can use the “Feature Requests” or “Help and Support -> Send Feedback to the Product Team” options from the Tiger Cloud console.

We're particularly interested in hearing from developers building:

About the authors

Todd J. Green

T.J. Green is a Senior Staff Engineer at Tiger Data, creators of TimescaleDB, where he is the implementor of pg_textsearch, a new Postgres extension for high-performance BM25 ranked text search. He brings deep expertise in database systems internals and indexing methods to this project. At Tiger Data, he has also contributed to pgvectorscale, the company's vector search extension for Postgres.

T.J. has over two decades of experience building database systems at leading technology companies including AWS, where he was a Principal Engineer working on core database infrastructure, and Pinecone, where he focused on vector search systems. His career spans positions at RelationalAI, LogicBlox, Microsoft, and Xyleme, with expertise ranging from query processing and optimization to distributed systems engineering. He has also taught database systems courses at both UC Davis and UC Berkeley.

T.J. holds a Ph.D. in Computer Science from the University of Pennsylvania, where his research focused on query languages and data provenance. His dissertation won the Morris and Dorothy

Rubinoff Award and received an honorable mention for the Jim Gray SIGMOD dissertation award. His academic honors also include an NSF CAREER award and a PODS 10-year test-of-time award. When not building write-optimized data structures, T.J. can be found in Davis, California with his wife, three kids, three dogs, and three chickens—or playing guitar at the Jazzschool in Berkeley on Tuesday nights.

Matvey Arye

Matvey Arye is a founding engineering leader at Tiger Data (creators of TimescaleDB), the premiere provider of relational database technology for time-series data and AI. Mat leads the team at Tiger Data responsible for building the go-to developer platform for AI applications.

Under his leadership, the Tiger Data engineering team has introduced partitioning, compression, and incremental materialized views for time-series data, plus cutting-edge indexing and performance innovations for AI.

Matvey earned a Bachelor degree in Engineering at The Cooper Union. He earned a Doctorate in Computer Science at Princeton University where his research focused on cross-continental data analysis covering issues such as networking, approximate algorithms, and performant data processing.

Receive the latest technical articles and release notes in your inbox.