
Sep 08, 2022

Table of contents

1. Definition: Time-Series Forecasting
2. Time-Series Forecasting Examples
3. When Is Time-Series Forecasting Useful?
4. What to Consider When You Do Time-Series Forecasting
5. Time-Series Forecasting Techniques
6. Time-Series Decomposition
7. Time-Series Regression Models
8. Exponential Smoothing
9. ARIMA Models
10. Neural Networks
11. TBATS
12. Conclusion

As time-series data becomes ubiquitous, measuring change is vital to understanding the world. Here at Timescale, we use our PostgreSQL and TimescaleDB superpowers to generate insights into the data to see what and how things changed and when they changed. That’s the beauty of time-series data.

But, if you have data showing past and present trends, can you predict the future? This is where time-series forecasting models come into play, helping us make accurate predictions based on historical patterns.

In this guide, we explore time-series forecasting models, along with the tools and techniques you need to make effective predictions.

Time-series forecasting, a technique for predicting future values based on historical data, is essential for tasks like demand forecasting, financial analysis, and operational planning. By analyzing data that we stored in the past, we can make informed decisions that can guide our business strategy and help us understand future trends.

Some of you may be asking yourselves what the difference is between time-series forecasting and algorithmic predictions using, for example, machine learning. Well, machine-learning techniques such as random forest, gradient boosting regressor, and time delay neural networks can help extrapolate temporal data, but they are far from the only available options or the best ones (as you will see in this article).

The most important property of a time-series algorithm is its ability to extrapolate patterns outside of the domain of training data, which most machine-learning techniques cannot do by default. This is where specialized time-series forecasting techniques come in.
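To make the extrapolation point concrete, here is a minimal sketch on made-up data. It uses a nearest-neighbor predictor as a simple stand-in for piecewise-constant learners such as tree-based models (an illustrative assumption, not a method from this article) and compares it with a fitted straight line when asked about a time point far outside the training range. All function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def nearest_neighbor_predict(xs, ys, x_new):
    """Predict with the target of the closest training point (piecewise constant)."""
    closest = min(range(len(xs)), key=lambda i: abs(xs[i] - x_new))
    return ys[closest]


# Toy training data (made up): a clean upward trend, y = 2t + 1 for t = 0..9.
train_t = list(range(10))
train_y = [2 * t + 1 for t in train_t]

intercept, slope = fit_line(train_t, train_y)

future_t = 20  # well outside the training range
linear_forecast = intercept + slope * future_t
neighbor_forecast = nearest_neighbor_predict(train_t, train_y, future_t)

print(linear_forecast)    # continues the trend beyond the training range
print(neighbor_forecast)  # stays at the last value seen during training
```

The nearest-neighbor predictor can only return values it has already seen, so it never leaves the range of the training targets, whereas the fitted line keeps following the trend.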

There are many approaches to forecasting time-series data, including advanced models like ARIMA, machine learning-based techniques, and modern deep learning methods. We'll explore these prediction methods for time series and provide practical examples.

Let’s check them out.

Many industries and scientific fields utilize time-series forecasting. Examples:

The list is already quite long, but anyone with access to accurate historical data can utilize time-series analysis methods to forecast future developments and trends.

Even though time-series forecasting may seem like a universally applicable technique, developers need to be aware of some limitations. Because forecasting isn’t a strictly defined method but rather a combination of data analysis techniques, analysts and data scientists must consider the limitations of the prediction models and the data itself.

The most crucial step when considering time-series forecasting is understanding your data model and knowing which business questions need to be answered using this data. By diving into the problem domain, a developer can more easily distinguish random fluctuations from stable and constant trends in historical data. This is useful when tuning the prediction model to generate the best forecasts and even considering the method to use.

When using time-series analysis, you must consider some data limitations. Common problems include generalizing from a single data source, obtaining appropriate measurements, and accurately identifying the correct model to represent the data.

There are quite a few factors associated with time-series forecasting, but the most important ones include the following:

Here’s an overview of the most popular time-series forecasting models, from statistical methods like ARIMA to advanced approaches using neural networks:

Time-series decomposition is a method for explicitly modeling the data as a combination of seasonal, trend, cycle, and remainder components instead of modeling it with temporal dependencies and autocorrelations. It can either be performed as a standalone method for time-series forecasting or as the first step in better understanding your data.

When using a decomposition model, you forecast future values for each of the components above and then recombine these predictions (by summing them in the additive case) to obtain the overall forecast. The most relevant forecasting techniques based on decomposition are Seasonal-Trend decomposition using LOESS (STL), Bayesian structural time series (BSTS), and Facebook Prophet.

Decomposition based on rates of change is a technique for analyzing seasonal adjustments. This technique constructs several component series, which combine (by addition or multiplication) to make the original time series. Each of the components has a specific characteristic or type of behavior. They usually include the trend, cycle, seasonal, and remainder (irregular) components.

Additive decomposition: Additive decomposition implies that time-series data is a function of the sum of its components. This can be represented with the following equation:

$$y_t = T_t + C_t + S_t + I_t$$

where $y_t$ is the time-series data, $T_t$ is the trend component, $C_t$ is the cycle component, $S_t$ is the seasonal component, and $I_t$ is the remainder.

Multiplicative decomposition: Instead of using addition to combine the components, multiplicative decomposition defines temporal data as a function of the product of its components. In the form of an equation:

$$y_t = T_t \times C_t \times S_t \times I_t$$

The question is how to identify a time series as additive or multiplicative. The answer is in its variation. If the magnitude of the seasonal swings grows or shrinks with the level of the series, it’s reasonable to treat the series as multiplicative. If the seasonal swings stay roughly constant in size, the series is additive.
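The two forms are closely related. As a standard identity (not something stated in the original text), and assuming every component is strictly positive, taking logarithms turns the multiplicative model into an additive one, so additive tooling can be applied to the log of the series:

```latex
\log y_t = \log T_t + \log C_t + \log S_t + \log I_t
```

Forecasts produced on the log scale then need to be exponentiated to get back to the original units.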

Some methods combine the trend and cycle components into one trend-cycle component. It can be referred to as the trend component even when it contains visible cycle properties. For example, when using seasonal-trend decomposition with LOESS, the time series is decomposed into seasonal, trend, and irregular (also called noise) components, where the cycle component is included in the trend component.
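As a rough illustration of additive decomposition with a combined trend-cycle component, the sketch below uses classical decomposition with a centered moving average. This is a deliberately simpler technique than STL, which estimates the components with LOESS. The data is made up and the function names are hypothetical.

```python
def centered_moving_average(series, period):
    """Trend-cycle estimate; None where the window does not fit."""
    half = period // 2
    trend = [None] * len(series)
    for i in range(half, len(series) - half):
        window = series[i - half : i + half + 1]
        if period % 2:  # odd period: plain centered window
            trend[i] = sum(window) / period
        else:  # even period: half weight on both end points of the window
            trend[i] = (sum(window) - 0.5 * (window[0] + window[-1])) / period
    return trend


def additive_decompose(series, period):
    """Split a series into trend, seasonal, and remainder with y = T + S + R."""
    trend = centered_moving_average(series, period)
    detrended = [None if t is None else y - t for y, t in zip(series, trend)]

    # Average the detrended values at each position in the seasonal cycle.
    seasonal_means = []
    for pos in range(period):
        values = [d for d in detrended[pos::period] if d is not None]
        seasonal_means.append(sum(values) / len(values))

    # Center the seasonal effects so they sum to zero over one cycle.
    offset = sum(seasonal_means) / period
    seasonal = [seasonal_means[i % period] - offset for i in range(len(series))]

    remainder = [
        None if t is None else y - t - s
        for y, t, s in zip(series, trend, seasonal)
    ]
    return trend, seasonal, remainder


# Toy data (made up): linear trend plus a repeating pattern of period 4.
pattern = [3.0, -1.0, -4.0, 2.0]
series = [10 + 0.5 * t + pattern[t % 4] for t in range(24)]

trend, seasonal, remainder = additive_decompose(series, period=4)
print([round(s, 2) for s in seasonal[:4]])
```

Because the toy series is exactly trend plus seasonality, the remainder comes out as (numerically) zero wherever the trend is defined. Real data would leave a visible irregular component.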

Time-series regression is a statistical method for forecasting future values based on historical data. The forecast variable is also called the regressand, dependent, or explained variable. The predictor variables are sometimes called the regressors, independent, or explanatory variables. Regression algorithms attempt to calculate the line of best fit for a given dataset. For example, a linear regression algorithm could try to minimize the sum of the squares of the differences between the observed value and predicted value to find the best fit.

Let’s look at one of the simplest regression models, simple linear regression. The regression model describes a linear relationship between the forecast variable y and a simple predictor variable x:

$$y_t = \beta_0 + \beta_1 x_t + \varepsilon_t$$

The coefficients $\beta_0$ and $\beta_1$ denote the line's intercept and slope. The slope $\beta_1$ represents the average predicted change in $y$ resulting from a one-unit increase in $x$.

It’s important to note that the observations aren’t perfectly aligned on the straight line but are somewhat scattered around it. Each of the observations $y_t$ is made up of a systematic component of the model ($\beta_0 + \beta_1 x_t$) and an “error” component ($\varepsilon_t$). The error component doesn’t have to be an actual error; the term encompasses any deviations from the straight-line model.
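The simple linear regression above can be sketched in a few lines using the closed-form least-squares solution for the intercept and slope. The observations are made up and the function name is hypothetical. The residuals play the role of the "error" component.

```python
def fit_simple_linear_regression(x, y):
    """Return (beta0, beta1) minimizing the sum of squared residuals."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    beta1 = sxy / sxx
    beta0 = mean_y - beta1 * mean_x
    return beta0, beta1


# Made-up observations scattered around a straight line.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [5.2, 7.9, 11.1, 13.8, 17.0]

beta0, beta1 = fit_simple_linear_regression(x, y)
fitted = [beta0 + beta1 * xi for xi in x]          # systematic component
residuals = [yi - fi for yi, fi in zip(y, fitted)]  # deviations from the line

print(f"intercept={beta0:.3f}, slope={beta1:.3f}")
print("residuals:", [round(e, 3) for e in residuals])
```

When the model includes an intercept, the least-squares residuals sum to zero (up to floating-point error), which is a quick sanity check on the fit.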

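As you can see, a single straight line is very limited in the relationships it can approximate, which is why richer regression models, such as multiple linear regression (with several predictors) and nonlinear regression, are often more useful. In each case, the coefficients are typically estimated by least squares.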

When it comes to time-series forecasting, data smoothing can improve the accuracy of our predictions by dampening noise and the influence of outliers in a time-series dataset. Smoothing makes distinct and repeating patterns that are hidden in the noise easier to see.

Exponential smoothing is a rule-of-thumb technique for smoothing time-series data using the exponential window function. Whereas the simple moving average method weighs historical data equally to make predictions for time series, exponential smoothing uses exponential functions to calculate decreasing weights over time. Different types of exponential smoothing include simple exponential smoothing and triple exponential smoothing (also known as the Holt-Winters method).
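Here is a minimal sketch of simple exponential smoothing next to an equal-weight moving average, on made-up data. The smoothing parameter name `alpha` and the choice to initialize the level with the first observation are assumptions of this sketch; libraries offer other initialization schemes.

```python
def simple_exponential_smoothing(series, alpha):
    """Return the smoothed levels; the last one is the one-step-ahead forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # assumption: initialize the level with the first value
    levels = [level]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
        levels.append(level)
    return levels


def moving_average_forecast(series, window):
    """Equal-weight forecast from the last `window` observations."""
    return sum(series[-window:]) / window


# Made-up demand figures with a jump at the end.
demand = [20.0, 22.0, 21.0, 23.0, 22.0, 30.0]

ses_forecast = simple_exponential_smoothing(demand, alpha=0.5)[-1]
ma_forecast = moving_average_forecast(demand, window=len(demand))

# Weights SES places on the latest, second latest, ... observations.
weights = [0.5 * (1 - 0.5) ** k for k in range(4)]

print(ses_forecast, ma_forecast, weights)
```

The exponentially decaying weights mean the smoothed forecast reacts to the recent jump faster than the equal-weight average does. A smaller `alpha` would make it react more slowly.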

AutoRegressive Integrated Moving Average, or ARIMA, is a forecasting method that combines both an autoregressive model and a moving average model. Autoregression uses observations from previous time steps to predict future values using a regression equation. An autoregressive model utilizes a linear combination of past variable values to make forecasts:

An autoregressive model of order p can be written as:

$$y_t = c + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t$$

where $\varepsilon_t$ is white noise. This form is like a multiple regression model but with delayed values of $y_t$ as predictors. We refer to this as an AR($p$) model, an autoregressive model of order $p$.
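To illustrate the autoregressive idea, the following sketch simulates a first-order model, estimates its constant and coefficient by least-squares regression of each value on the previous one, and iterates the fitted equation to forecast. Restricting to order 1 is a simplification of this sketch, the data is simulated, and the function names are hypothetical.

```python
import random


def simulate_ar1(c, phi, n, seed=0):
    """Generate y_t = c + phi * y_{t-1} + eps_t with standard normal eps_t."""
    rng = random.Random(seed)
    y = [c / (1 - phi)]  # start at the process mean
    for _ in range(n - 1):
        y.append(c + phi * y[-1] + rng.gauss(0, 1))
    return y


def fit_ar1(y):
    """Least-squares regression of y_t on y_{t-1}; returns (c, phi)."""
    lagged, current = y[:-1], y[1:]
    n = len(current)
    mean_lag = sum(lagged) / n
    mean_cur = sum(current) / n
    sxy = sum((a - mean_lag) * (b - mean_cur) for a, b in zip(lagged, current))
    sxx = sum((a - mean_lag) ** 2 for a in lagged)
    phi = sxy / sxx
    return mean_cur - phi * mean_lag, phi


def forecast_ar1(last_value, c, phi, steps):
    """Iterate the AR(1) equation with future noise set to its mean of zero."""
    forecasts = []
    for _ in range(steps):
        last_value = c + phi * last_value
        forecasts.append(last_value)
    return forecasts


series = simulate_ar1(c=2.0, phi=0.6, n=500)
c_hat, phi_hat = fit_ar1(series)
forecasts = forecast_ar1(series[-1], c_hat, phi_hat, steps=5)

print(f"c_hat={c_hat:.2f}, phi_hat={phi_hat:.2f}")
print([round(f, 2) for f in forecasts])
```

With a coefficient between 0 and 1, multi-step forecasts decay geometrically toward the estimated long-run mean, `c_hat / (1 - phi_hat)`.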

On the other hand, a moving average model uses a linear combination of forecast errors for its predictions:

$$y_t = c + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \cdots + \theta_q \varepsilon_{t-q}$$

where $\varepsilon_t$ represents white noise. We refer to this as an MA($q$) model, a moving average model of order $q$. The value of $\varepsilon_t$ is not observed, so we can’t classify it as a regression in the usual sense.
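A moving average model is easy to simulate, even though its noise terms cannot be observed in real data. The sketch below generates a first-order series from simulated white noise and checks a well-known property: the autocorrelation of a moving average model of order q is zero beyond lag q. Function names and parameter values are illustrative assumptions.

```python
import random


def simulate_ma(c, thetas, n, seed=0):
    """Generate y_t = c + eps_t + theta_1 * eps_{t-1} + ... + theta_q * eps_{t-q}."""
    rng = random.Random(seed)
    q = len(thetas)
    eps = [rng.gauss(0, 1) for _ in range(n + q)]
    series = []
    for t in range(q, n + q):
        past = sum(theta * eps[t - k] for k, theta in enumerate(thetas, start=1))
        series.append(c + eps[t] + past)
    return series


def autocorrelation(series, lag):
    """Sample autocorrelation at the given lag."""
    n = len(series)
    mean = sum(series) / n
    var = sum((v - mean) ** 2 for v in series)
    cov = sum((series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n))
    return cov / var


theta = 0.8
series = simulate_ma(c=5.0, thetas=[theta], n=5000)

acf1 = autocorrelation(series, 1)
acf2 = autocorrelation(series, 2)
theory1 = theta / (1 + theta**2)  # theoretical lag-1 autocorrelation of MA(1)

print(f"lag 1: {acf1:.2f} (theory {theory1:.2f}), lag 2: {acf2:.2f}")
```

This cut-off in the autocorrelation is one of the usual clues analysts look for when choosing the order of the moving average part.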

If we combine differencing with autoregression and a moving average model, we obtain a non-seasonal ARIMA model. The complete model can be represented with the following equation:

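$$y'_t = c + \phi_1 y'_{t-1} + \cdots + \phi_p y'_{t-p} + \theta_1 \varepsilon_{t-1} + \cdots + \theta_q \varepsilon_{t-q} + \varepsilon_t$$

where $y'_t$ is the differenced series (it may have been differenced more than once). The “predictors” on the right-hand side combine lagged values of $y'_t$ and lagged errors. The model is called an ARIMA($p$, $d$, $q$) model. The model's parameters are $p$, the order of the autoregressive part; $d$, the degree of differencing applied; and $q$, the order of the moving average part.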
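The "integrated" part of ARIMA is the differencing step. As a minimal sketch, the code below differences a made-up series once, forecasts the differences with their mean (the simplest case, with no autoregressive or moving average terms, often called a random walk with drift), and then sums the forecasts back onto the last observed value. Function names are hypothetical.

```python
def difference(series, d=1):
    """Apply first differencing d times."""
    for _ in range(d):
        series = [b - a for a, b in zip(series, series[1:])]
    return series


def undifference(last_value, differenced_forecasts):
    """Invert one round of differencing by cumulatively summing from last_value."""
    restored = []
    for step in differenced_forecasts:
        last_value += step
        restored.append(last_value)
    return restored


# Made-up series with a steady upward drift.
series = [100.0, 103.0, 105.0, 108.0, 111.0, 113.0, 116.0]

diffs = difference(series, d=1)
drift = sum(diffs) / len(diffs)  # the constant c when p = q = 0 and d = 1

# With p = q = 0, the forecast of every future difference is just the constant.
forecasts = undifference(series[-1], [drift] * 3)

print(diffs)
print([round(f, 2) for f in forecasts])
```

A full ARIMA fit would replace the constant forecast of the differences with one that also uses lagged differences and lagged errors, but the difference-then-restore structure stays the same.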

The SARIMA model (Seasonal ARIMA) is an extension of the ARIMA model. This extension adds a linear combination of seasonal past values and forecast errors.
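Two seasonal building blocks can be sketched quickly. Seasonal differencing (subtracting the value from one season earlier) is the seasonal counterpart of ordinary differencing and is part of the standard SARIMA formulation, although the text above does not discuss it. The seasonal naive forecast is a common baseline rather than SARIMA itself. The data is made up and the function names are hypothetical.

```python
def seasonal_difference(series, period):
    """Return y_t - y_{t-period} for every t where both values exist."""
    return [series[t] - series[t - period] for t in range(period, len(series))]


def seasonal_naive_forecast(series, period, steps):
    """Repeat the last observed season to forecast the next `steps` values."""
    last_season = series[-period:]
    return [last_season[h % period] for h in range(steps)]


# Made-up quarterly values: the same seasonal shape, rising by 4 each year.
quarterly = [10, 20, 15, 5, 14, 24, 19, 9, 18, 28, 23, 13]

seasonal_diffs = seasonal_difference(quarterly, period=4)
naive = seasonal_naive_forecast(quarterly, period=4, steps=6)

print(seasonal_diffs)
print(naive)
```

After seasonal differencing, the repeating quarterly shape disappears and only the year-over-year change remains, which is the kind of series the non-seasonal part of the model is then left to describe.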

Neural networks have rapidly become a go-to solution for time-series forecasting, offering powerful tools for classification and prediction in scenarios with complex data relationships. In principle, a neural network with enough hidden units can approximate any continuous function to arbitrary accuracy, which is what makes it attractive for time-series forecasting.

Unlike classical models like ARMA or ARIMA, which rely on linear assumptions between inputs and outputs, neural networks adapt to nonlinear patterns, making them suitable for a wider range of data types. This means they can approximate any nonlinear function without prior knowledge about the properties of the data series.

One common neural network architecture for forecasting is the Multilayer Perceptron (MLP), which uses layers of interconnected neurons to approximate complex functions and relationships within data. Beyond MLPs, Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs) are particularly useful for sequential data like time series, as they maintain memory of previous steps, capturing trends over time.

Key benefits include:

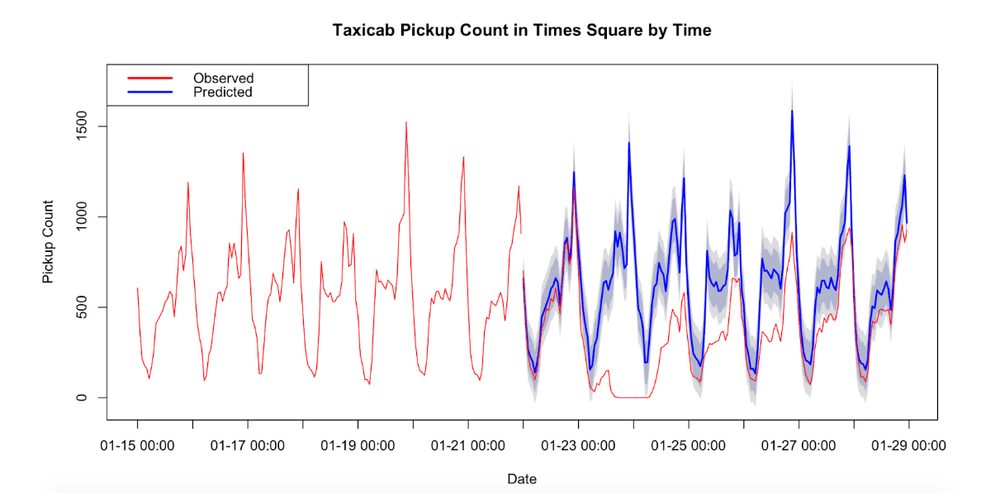

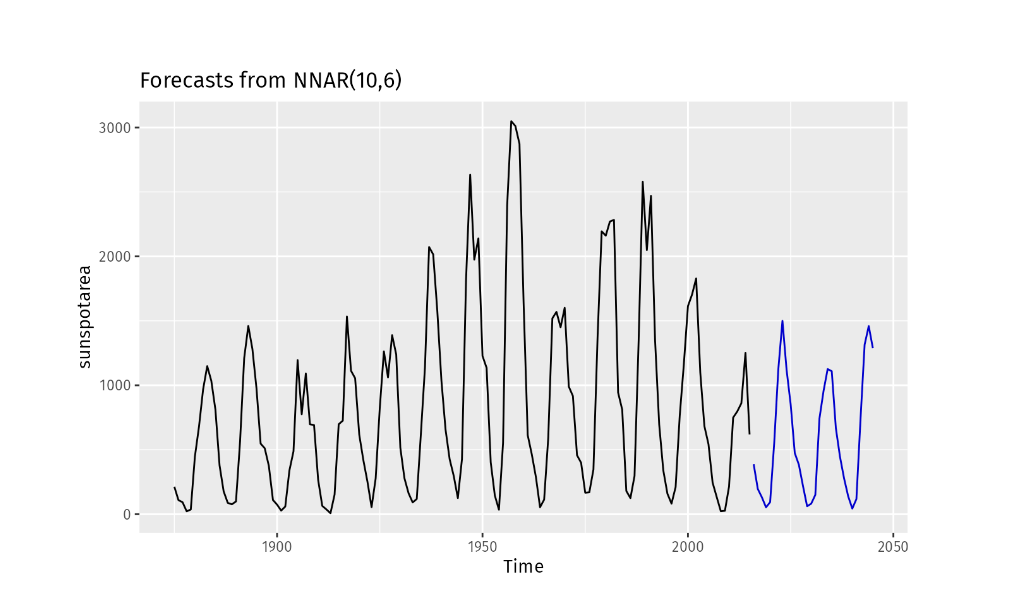

In the figure below, a neural network with ten lagged inputs and a single hidden layer of six neurons is used for time-series forecasting.
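To show how a series is fed to such a network, the sketch below builds sliding windows of ten lagged values and runs one forward pass through a network with six hidden neurons. The weights are random and untrained, the tanh activation is an assumption of this sketch, and training (for example with backpropagation in a framework such as TensorFlow or PyTorch) is left out entirely. All names are hypothetical.

```python
import math
import random


def make_lagged_windows(series, n_lags):
    """Turn a series into (inputs, target) pairs: n_lags past values -> next value."""
    inputs, targets = [], []
    for t in range(n_lags, len(series)):
        inputs.append(series[t - n_lags : t])
        targets.append(series[t])
    return inputs, targets


def init_mlp(n_inputs, n_hidden, seed=0):
    """Random, untrained weights for one hidden layer and a single linear output."""
    rng = random.Random(seed)
    return {
        "w_hidden": [
            [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
            for _ in range(n_hidden)
        ],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b_out": 0.0,
    }


def mlp_forward(params, window):
    """Forward pass: tanh hidden layer, then a linear output neuron."""
    hidden = [
        math.tanh(sum(w * x for w, x in zip(weights, window)) + bias)
        for weights, bias in zip(params["w_hidden"], params["b_hidden"])
    ]
    return sum(w * h for w, h in zip(params["w_out"], hidden)) + params["b_out"]


# Made-up series: a sine wave sampled at 60 points.
series = [math.sin(2 * math.pi * t / 12) for t in range(60)]

inputs, targets = make_lagged_windows(series, n_lags=10)
params = init_mlp(n_inputs=10, n_hidden=6)
prediction = mlp_forward(params, inputs[0])  # meaningless until weights are trained

print(len(inputs), len(inputs[0]), prediction)
```

The windowing step is the part that carries over to real projects: whatever framework trains the network, each training example is a block of lagged values paired with the value that follows it.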

Time-series data often contains intricate seasonal patterns that evolve across various time frames, such as daily, weekly, or yearly trends. Traditional models like ARIMA and exponential smoothing usually capture only a single seasonality, limiting their effectiveness for complex series. The TBATS model addresses this limitation by accounting for multiple, non-nested, and even non-integer seasonal patterns, ideal for long-term and complex forecasting tasks.

TBATS stands for Trigonometric seasonality, Box-Cox transformation, ARMA errors, Trend, and Seasonal components, each of which adds a layer of precision to forecasts:

In this figure, a TBATS model is applied to forecast call volume data, demonstrating its ability to capture complex seasonal trends.

However, as shown, TBATS often produces wide prediction intervals that may be overly conservative. This occurs because TBATS models handle diverse seasonality but can sometimes overestimate uncertainty in long-term forecasts, especially with noisy or highly fluctuating data.
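Two of the ingredients in the TBATS name are simple to sketch on their own: the Box-Cox transformation and trigonometric (Fourier) terms, which is how a non-integer seasonal period such as 365.25 days can be represented. The full TBATS model does considerably more than this, so treat the code as an illustration of the building blocks only. The function names and the chosen periods and harmonics are assumptions.

```python
import math


def box_cox(value, lam):
    """Box-Cox transform of a positive value; lam = 0 gives the natural log."""
    if value <= 0:
        raise ValueError("Box-Cox requires positive values")
    if lam == 0:
        return math.log(value)
    return (value**lam - 1) / lam


def inverse_box_cox(value, lam):
    """Map a transformed value back to the original scale."""
    if lam == 0:
        return math.exp(value)
    return (lam * value + 1) ** (1 / lam)


def fourier_terms(t, period, n_harmonics):
    """Sine/cosine pairs for one seasonal period, which may be non-integer."""
    terms = []
    for k in range(1, n_harmonics + 1):
        angle = 2 * math.pi * k * t / period
        terms.extend([math.sin(angle), math.cos(angle)])
    return terms


# Two seasonal periods for daily data: weekly (7) and yearly (365.25, non-integer).
features_day_10 = fourier_terms(10, 7, 2) + fourier_terms(10, 365.25, 3)

transformed = box_cox(50.0, lam=0.5)
restored = inverse_box_cox(transformed, lam=0.5)

print(len(features_day_10), transformed, restored)
```

Each seasonal period contributes its own set of sine and cosine terms, so several seasonalities can be described side by side without one having to be a multiple of another.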

Choosing the right tools for time-series forecasting is key to achieving accurate predictions. Popular options include Python libraries like statsmodels, Prophet, and TensorFlow, which support building time-series prediction models for various use cases, alongside databases and streaming platforms for storing and moving the data. Here are some of the most widely used ones:

TimescaleDB is a relational database built on PostgreSQL, optimized for time-series data. It provides features like automatic data partitioning (hypertables), continuous aggregates, and advanced compression. With SQL as its query language, it empowers developers to analyze time-series data without learning a new query paradigm.

Apache Druid is a database designed for workflows that require fast aggregation and querying of time-stamped events. It supports flexible ingestion methods, making it suitable for log and event analytics. Druid excels in scenarios with high concurrency and low latency requirements.

While not a database, Kafka is a powerful tool for handling streaming time-series data. It’s commonly used as a pipeline for ingesting, processing, and distributing time-stamped events across systems. (See how you can build an IoT pipeline for real-time analytics using Kafka.)

Python provides a rich ecosystem for time-series analysis:

Effective visualization is key to understanding time-series data:

For machine learning on time-series data, frameworks like TensorFlow and PyTorch offer pre-built models and flexibility for custom solutions. Additionally, libraries like tslearn focus specifically on time-series machine learning.

The choice of tools depends on your use case. For developers needing SQL-based analytics, high performance, and scalability, TimescaleDB stands out. For high-frequency monitoring or custom machine learning pipelines, Druid or Python’s libraries may be more suitable. By understanding the strengths of these tools, you can build robust solutions tailored to your specific time-series challenges.

By applying the forecasting methods and tools covered above, businesses can make data-driven decisions that improve efficiency and outcomes.

Time-series forecasting holds tremendous value for business development as it leverages historical data with a time component. While there are many forecasting methods to choose from, most are focused on specific situations and types of data, making it relatively easy to choose the right one.

If you are interested in time-series forecasting, look at this tutorial about analyzing cryptocurrency market data. Using a time-series database like TimescaleDB, you can ditch complex analysis techniques that require a lot of custom code and instead use the SQL query language to generate insights.
